Are you sure you want to delete this task? Once this task is deleted, it cannot be recovered.

|

|

1 year ago | |

|---|---|---|

| PCGCv1-master | 1 year ago | |

| author results | 2 years ago | |

| pytorch | 1 year ago | |

| results | 2 years ago | |

| tensorlayer | 1 year ago | |

| LICENSE | 1 year ago | |

| Lossy Point Cloud Geometry Compression via End-to-End Learning.pdf | 2 years ago | |

| OpenPointCloud-logo.png | 1 year ago | |

| PCGCv1 explanation.docx | 2 years ago | |

| PCGCv1 performance.xlsx | 2 years ago | |

| README - old.md | 1 year ago | |

| README.md | 1 year ago | |

| TF_PT_TL.xlsx | 1 year ago | |

| bd-result.png | 2 years ago | |

| flowchart.vsdx | 2 years ago | |

| pcgcv1_D1.png | 2 years ago | |

| pcgcv1_D2.png | 2 years ago | |

| test_result.xlsx | 2 years ago | |

README.md

PCGCv1

PCGCv1, point cloud compression, deeplearning, hyper

PCGCv1 is a kind of lossy point cloud compression method. It uses hyper priors to improve performance. The source code uses Tensorflow as deeplearning framework, here we transplant to tensorlayer and pytorch.

Paper Citation:

@ARTICLE{9321375, author={Wang, Jianqiang and Zhu, Hao and Liu, Haojie and Ma, Zhan}, journal={IEEE Transactions on Circuits and Systems for Video Technology}, title={Lossy Point Cloud Geometry Compression via End-to-End Learning}, year={2021}, volume={31}, number={12}, pages={4909-4923}, doi={10.1109/TCSVT.2021.3051377}}

our contributions

1.transplant from TensorFlow to tensorlayer and pytorch.

2.benchmark tests on many PCs, including those not tested by author.

3.BD-BR and BD-PSNR calculation over GPCC(octree), also compared to trisoup.

4.draw a flow chart about encoding process to help better understanding.

5.compare performance between different frameworks.

files

1.trainingset:refer to points64_part1.zip

2.testset:refer to here

3.PCGCv1-master:source code and models on tensorflow

4.pytorch:code and model file on pytorch

5.author results:test results on TF by author

6.tensorlayer:code and model file on tensorlayer

7.Lossy Point Cloud Geometry Compression via End-to-End Learning.pdf:origional paper

8.PCGCv1 performance.xlsx:performance test result between different frameworks

9.PCGCv1 explanation.docx:introduction for paper, migration, instructions

10.flowchart.vsdx:flow chart about encoding process

11.results: test results by contributors

environment

1.pytorch

- ubuntu 18.04

- cuda V10.2.89

- python 3.6.9

- refer to pytorch/requirements-pytorch.txt

2.tensorlayer

- Ubuntu 18.04

- cuda 10.1.243

- python 3.7.6

- tensorflow-gpu 2.3.1

- tensorlayer3 1.2.0

- torch 1.8.1

- torchac 0.9.3

- refer to pytorch/requirements-pytorch.txt

command

1.pytorch

cd pytorch

chmod +x myutils/tmc3

chmod +x myutils/pc_error_d

training:

python train.py

encode:

python test.py --command=compress --input=".../longdress_vox10_1300.ply" --ckpt_dir="ckpts/epoch_13_13599_a6b3.pth" --batch_parallel=64

decode:

python test.py --command=decompress --input="compressed/longdress_vox10_1300" --ckpt_dir="ckpts/epoch_13_13599_a6b3.pth"

evaluate:

python eval.py --input ".../longdress_vox10_1300.ply" --ckpt_dir="ckpts/epoch_13_13599_a6b3.pth"

2.tensorlayer

cd tensorlayer

chmod +x myutils/tmc3

chmod +x myutils/pc_error_d

training:

python train.py

encode:

python test.py --command=compress --input=".../longdress_vox10_1300.ply" --ckpt_dir="ckpts/epoch_30_30349_a6b3.npz" --batch_parallel=4

decode:

python test.py --command=decompress --input="compressed/longdress_vox10_1300" --ckpt_dir="ckpts/epoch_30_30349_a6b3.npz"

evaluate:

python eval.py --input ".../longdress_vox10_1300.ply" --ckpt_dir="ckpts/epoch_30_30349_a6b3.npz"

performance

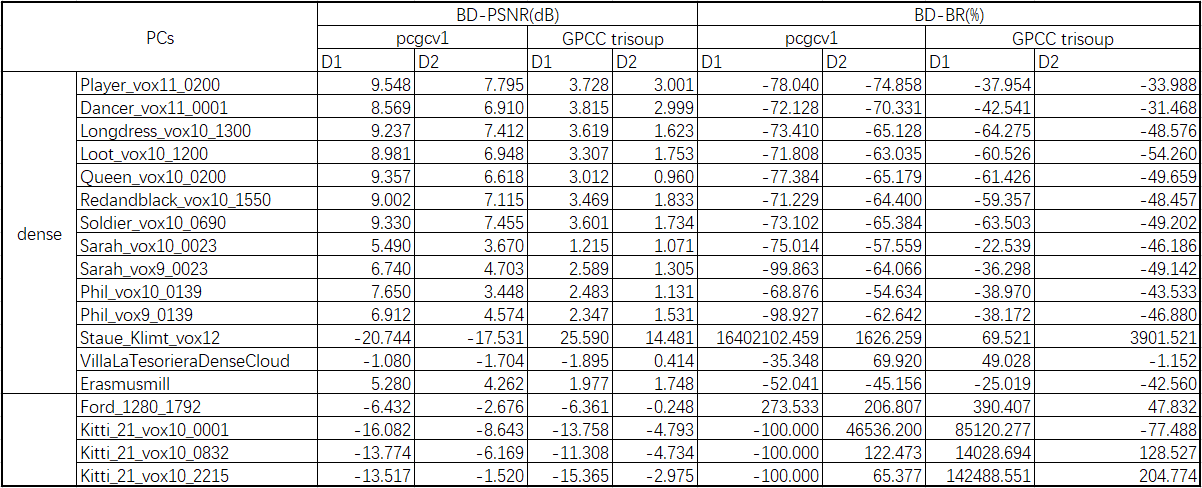

We first caculate the BD-PSNR and BD-BR rate of PCGCv1 and GPCC trisoup over GPCC octree on many PCs, the result shows as below. We can see from the table, that for dense PC with bit width of 10 and 11, PCGCv1 gets better performance over octree and trisoup, while for sparse or vox12 PC, it gets worse. The main reason is that there is no PC data in training sets with similar distributions or geometry features.

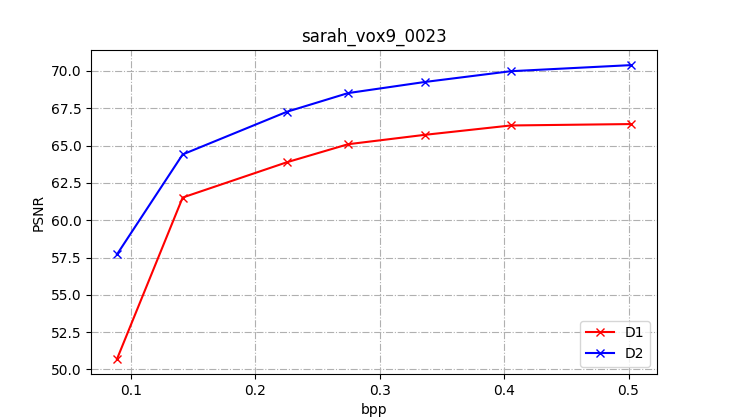

We then carry out a benchmark test on PC files including those are not tested by author. The part of the result shows below. For other results, please go to results/

| PC | ori_points | mseF,PSNR (p2plane) | mseF,PSNR (p2point) | time_enc | time_dec | bpp |

|---|---|---|---|---|---|---|

| sarah_vox9_0023 | 299363 | 57.7055 | 50.695 | 33.071 | 17.38 | 0.0889 |

| sarah_vox9_0023 | 299363 | 64.4054 | 61.5184 | 26.308 | 12.194 | 0.1417 |

| sarah_vox9_0023 | 299363 | 67.2605 | 63.8802 | 27.383 | 12.014 | 0.2254 |

| sarah_vox9_0023 | 299363 | 68.5138 | 65.0884 | 36.251 | 16.083 | 0.2746 |

| sarah_vox9_0023 | 299363 | 69.2561 | 65.7159 | 34.676 | 12.428 | 0.3361 |

| sarah_vox9_0023 | 299363 | 69.9792 | 66.3444 | 32.836 | 12.619 | 0.4053 |

| sarah_vox9_0023 | 299363 | 70.3887 | 66.4396 | 32.061 | 15.015 | 0.5022 |

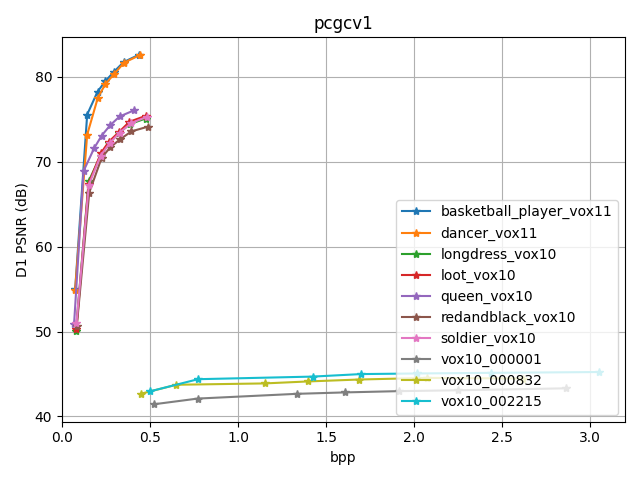

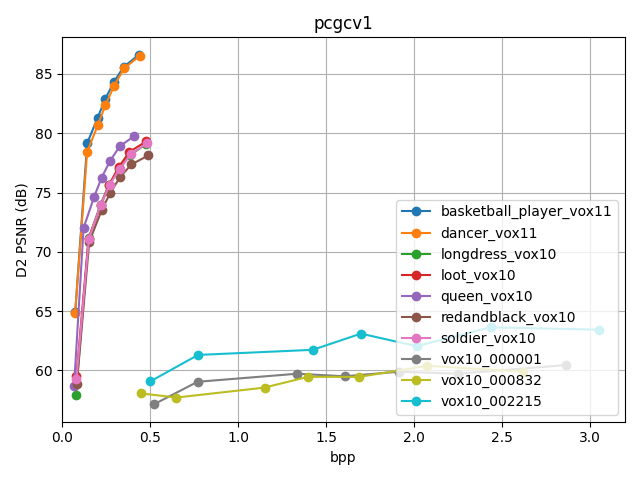

According to the results above, we draw D1-bpp and D2-bpp figures, to clearly show the performance between different PC files as below. It is obvious that, PCs of vox11 have best performance, which are on up-left part of the figure, PCs of vox10 are second in the middle, and PCs of sparse have bad performance on the bottom part.

We also make tests to compare the performances between different frameworks of TensorFlow, PyTorch, TensorLayer on testsets. From the results below, we can see that TF has the biggest bpp and best D1 and D2. Overall, the performance of TF is better than the other two. PCGCv1 is hard to train, as it has hyper-prior structure, especially for pytorch.

| PC file | PT_D2 | PT_D1 | PT_time_enc | PT_time_dec | PT_bpp | TL_D2 | TL_D1 | TL_time_enc | TL_time_dec | TL_bpp | TF_D2 | TF_D1 | TF_time_enc | TF_time_dec | TF_bpp |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| longdress_vox10_1300 | 78.141 | 74.402 | 23.247 | 14.524 | 0.461 | 78.355 | 74.623 | 30.629 | 11.171 | 0.409 | 79.056 | 75.103 | 111.515 | 64.833 | 0.475 |

| loot_vox10_1200 | 78.37 | 74.725 | 23.033 | 15.752 | 0.461 | 78.548 | 74.881 | 28.628 | 11.067 | 0.407 | 79.307 | 75.438 | 93.721 | 47.03 | 0.478 |

| phil_vox9_0139 | 69.506 | 66.086 | 9.933 | 6.691 | 0.437 | 69.949 | 66.342 | 13.689 | 4.218 | 0.404 | 69.841 | 66.004 | 30.855 | 15.075 | 0.49 |

| phil_vox10_0139 | 74.888 | 71.174 | 42.176 | 25.102 | 0.406 | 75.3 | 71.423 | 51.019 | 17.319 | 0.382 | 75.134 | 70.848 | 109.787 | 54.19 | 0.462 |

| queen_vox10_0200 | 78.858 | 75.301 | 25.637 | 14.562 | 0.397 | 79.287 | 75.612 | 33.443 | 10.5 | 0.363 | 79.744 | 76.062 | 84.323 | 42.428 | 0.409 |

| redandblack_vox10_1550 | 77.414 | 73.69 | 21.179 | 13.991 | 0.467 | 77.59 | 73.925 | 26.341 | 9.637 | 0.415 | 78.133 | 74.161 | 73.224 | 39.963 | 0.491 |

| sarah_vox9_0023 | 70.132 | 66.582 | 9.43 | 6.487 | 0.448 | 70.477 | 66.796 | 13.958 | 5.954 | 0.412 | 70.389 | 66.44 | 32.061 | 15.015 | 0.502 |

| sarah_vox10_0023 | 75.243 | 71.426 | 36.756 | 23.853 | 0.408 | 75.6 | 71.599 | 44.684 | 17.633 | 0.382 | 75.429 | 71.121 | 107.195 | 47.374 | 0.461 |

| soldier_vox10_0690 | 78.25 | 74.52 | 28.984 | 18.417 | 0.463 | 78.429 | 74.702 | 36.533 | 13.448 | 0.411 | 79.17 | 75.329 | 109.487 | 53.836 | 0.486 |

Cite from:

https://github.com/NJUVISION/PCGCv1

The source code files are provided by Nanjing University Vision Lab. And thanks for the help from SJTU Cooperative Medianet Innovation Center. Please contact us (wangjq@smail.nju.edu.cn) if you have any questions.

contributors

name: Ye Hua

email: yeh@pcl.ac.cn

PCGCv1, point cloud compression, deeplearning, hyper

Text CSV Jupyter Notebook Python Markdown other

Apache-2.0