100 changed files with 15453 additions and 10133 deletions

-

+1 -1.drone.yml

-

+2 -2CONTRIBUTING_CN.md

-

+264 -0ConstraintList.md

-

+266 -0ConstraintList_en.md

-

+197 -0Debugging_and_Tuning.md

-

+34 -72README.md

-

+96 -0README.rst

-

+66 -0README_en.md

-

+1172 -91SupportedList.md

-

+1183 -0SupportedList_en.md

-

+450 -0USER_GUIDE.md

-

BINdoc/pic/MSA_F.png

-

BINdoc/pic/MSA_SIG.png

-

BINdoc/pic/error_log.png

-

BINdoc/pic/time_log.png

-

BINdoc/pic/troubleshooter_result1.png

-

BINdoc/pic/troubleshooter_result2.png

-

BINdoc/pic/troubleshooter_result3.png

-

+0 -5ms_adapter/__init__.py

-

+0 -52ms_adapter/pytorch/__init__.py

-

+0 -28ms_adapter/pytorch/_ref/__init__.py

-

+0 -21ms_adapter/pytorch/common/__init__.py

-

+0 -68ms_adapter/pytorch/common/dtype.py

-

+0 -22ms_adapter/pytorch/cuda/__init__.py

-

+0 -16ms_adapter/pytorch/fft/fft.py

-

+0 -2238ms_adapter/pytorch/functional.py

-

+0 -7ms_adapter/pytorch/nn/__init__.py

-

+0 -1783ms_adapter/pytorch/nn/functional.py

-

+0 -371ms_adapter/pytorch/nn/modules/container.py

-

+0 -668ms_adapter/pytorch/nn/modules/conv.py

-

+0 -288ms_adapter/pytorch/nn/modules/module.py

-

+0 -454ms_adapter/pytorch/nn/modules/pooling.py

-

+0 -104ms_adapter/pytorch/nn/modules/rnn.py

-

+0 -31ms_adapter/pytorch/nn/modules/utils.py

-

+0 -377ms_adapter/pytorch/nn/parameter.py

-

+0 -1871ms_adapter/pytorch/tensor.py

-

+0 -1ms_adapter/pytorch/utils/__init__.py

-

+0 -180ms_adapter/pytorch/utils/data/_utils/collate.py

-

+0 -17ms_adapter/pytorch/utils/data/datapipes/map/__init__.py

-

+0 -181ms_adapter/torchvision/io/video_reader.py

-

+0 -66ms_adapter/torchvision/ops/_register_onnx_ops.py

-

+0 -566ms_adapter/torchvision/utils.py

-

+0 -73ms_adapter/utils.py

-

+6 -0msadapter/__init__.py

-

+11 -0msadapter/package_info.py

-

+54 -0msadapter/pytorch/__init__.py

-

+22 -0msadapter/pytorch/_ref/__init__.py

-

+48 -0msadapter/pytorch/_register/__init__.py

-

+45 -0msadapter/pytorch/_register/getitem_impl.py

-

+162 -0msadapter/pytorch/_register/register_multitype_ops.py

-

+98 -0msadapter/pytorch/_register/register_standard_method.py

-

+254 -0msadapter/pytorch/_register/register_utils.py

-

+217 -0msadapter/pytorch/_register_numpy_primitive.py

-

+0 -0msadapter/pytorch/_six.py

-

+0 -0msadapter/pytorch/_utils.py

-

+31 -0msadapter/pytorch/amp/__init__.py

-

+0 -0msadapter/pytorch/autograd/__init__.py

-

+2 -2msadapter/pytorch/autograd/function.py

-

+2 -2msadapter/pytorch/autograd/variable.py

-

+29 -0msadapter/pytorch/common/__init__.py

-

+40 -9msadapter/pytorch/common/_inner.py

-

+0 -0msadapter/pytorch/common/device.py

-

+129 -0msadapter/pytorch/common/dtype.py

-

+7 -22msadapter/pytorch/conflict_functional.py

-

+36 -0msadapter/pytorch/cuda/__init__.py

-

+2 -1msadapter/pytorch/fft/__init__.py

-

+18 -0msadapter/pytorch/fft/fft.py

-

+2993 -0msadapter/pytorch/functional.py

-

+104 -0msadapter/pytorch/hub.py

-

+31 -0msadapter/pytorch/linalg/__init__.py

-

+230 -0msadapter/pytorch/linalg/linalg.py

-

+8 -0msadapter/pytorch/nn/__init__.py

-

+2605 -0msadapter/pytorch/nn/functional.py

-

+51 -30msadapter/pytorch/nn/init.py

-

+38 -15msadapter/pytorch/nn/modules/__init__.py

-

+226 -144msadapter/pytorch/nn/modules/activation.py

-

+198 -0msadapter/pytorch/nn/modules/adaptive.py

-

+26 -97msadapter/pytorch/nn/modules/batchnorm.py

-

+23 -0msadapter/pytorch/nn/modules/channelshuffle.py

-

+1015 -0msadapter/pytorch/nn/modules/container.py

-

+601 -0msadapter/pytorch/nn/modules/conv.py

-

+1 -1msadapter/pytorch/nn/modules/distance.py

-

+25 -74msadapter/pytorch/nn/modules/dropout.py

-

+1 -1msadapter/pytorch/nn/modules/flatten.py

-

+42 -0msadapter/pytorch/nn/modules/fold.py

-

+81 -0msadapter/pytorch/nn/modules/instancenorm.py

-

+12 -13msadapter/pytorch/nn/modules/linear.py

-

+90 -17msadapter/pytorch/nn/modules/loss.py

-

+644 -0msadapter/pytorch/nn/modules/module.py

-

+8 -8msadapter/pytorch/nn/modules/normalization.py

-

+64 -27msadapter/pytorch/nn/modules/padding.py

-

+26 -0msadapter/pytorch/nn/modules/pixelshuffle.py

-

+202 -0msadapter/pytorch/nn/modules/pooling.py

-

+504 -0msadapter/pytorch/nn/modules/rnn.py

-

+8 -12msadapter/pytorch/nn/modules/sparse.py

-

+288 -0msadapter/pytorch/nn/modules/transformer.py

-

+2 -2msadapter/pytorch/nn/modules/unpooling.py

-

+4 -2msadapter/pytorch/nn/modules/upsampling.py

-

+126 -0msadapter/pytorch/nn/modules/utils.py

-

+232 -0msadapter/pytorch/nn/parameter.py

+ 1

- 1

.drone.yml

View File

| @@ -11,7 +11,7 @@ trigger: | |||

| steps: | |||

| - name: Code Inspection | |||

| image: swr.cn-north-4.myhuaweicloud.com/hanjr/msadapter:2.0.0.dev20221113_torch1.12.1 | |||

| image: swr.cn-north-4.myhuaweicloud.com/hanjr/msadapter:mindspore2.0.0_torch1.12.1 | |||

| commands: | |||

| - sh run.sh | |||

+ 2

- 2

CONTRIBUTING_CN.md

View File

| @@ -103,8 +103,8 @@ class Linear(Module): | |||

| ``` | |||

| #!/usr/bin/env python | |||

| # -*- coding: utf-8 -*- | |||

| from ms_adapter.pytorch.nn import Module, Linear, Identity, Bilinear | |||

| from ms_adapter.pytorch import tensor | |||

| from msadapter.pytorch.nn import Module, Linear, Identity, Bilinear | |||

| from msadapter.pytorch import tensor | |||

| from mindspore import context | |||

| import numpy as np | |||

| import mindspore as ms | |||

+ 264

- 0

ConstraintList.md

View File

| @@ -0,0 +1,264 @@ | |||

| 简体中文 | [English](ConstraintList_en.md) | |||

| - [接口约束列表](#jump1) | |||

| - [Torch](#jump2) | |||

| - [Tensor](#jump3) | |||

| - [Torch.nn](#jump4) | |||

| - [nn.functional](#jump5) | |||

| - [torch.linalg](#jump6) | |||

| ## <span id="jump1">接口约束列表</span> | |||

| ### <span id="jump2">Torch</span> | |||

| | MSAdapter接口 | 约束条件 | | |||

| | --------------- | -------------- | | |||

| | torch.frombuffer | require_grad暂不支持 | | |||

| | torch.multinomial | 暂不支持传入Generator | | |||

| | torch.randint | 暂不支持传入Generator | | |||

| | torch.randperm |暂不支持传入Generator | | |||

| | torch.imag | 暂不支持图模式 | | |||

| | torch.max | 不支持other,不支持图模式 | | |||

| | torch.sum | 暂不支持图模式 | | |||

| | torch.lu | 暂不支持图模式, `get_infos=True`场景下,暂不支持错误扫描; 暂不支持`pivot=False`入参, 仅支持二维方阵输入,不支持(*,M,N)形式输入 | | |||

| | torch.lu_solve | 暂不支持图模式, 入参`left=False`暂不支持,入参`LU`仅支持二维方阵输入,不支持三维输入 | | |||

| | torch.lstsq | 暂时不支持返回第二个参数QR,暂不支持图模式,反向梯度暂不支持 | | |||

| | torch.svd | Ascend上暂不支持图模式,Ascend上反向梯度暂不支持 | | |||

| | torch.nextafter | CPU上暂不支持float32输入 | | |||

| | torch.matrix_power | GPU上暂不支持参数`n`小于0 | | |||

| | torch.i0 | Ascend上暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.index_add | 暂不支持二维以上的`input`或`dim`>=1,暂不支持图模式 | | |||

| | torch.index_copy | 暂不支持二维以上的`input`或`dim`>=1,暂不支持图模式 | | |||

| | torch.scatter_reduce | 暂不支持`reduce`="mean" | | |||

| | torch.histogramdd | 暂不支持float64类型输入 | | |||

| | torch.asarray | 暂不支持输入`device`、 `copy`和`requires_grad`参数配置功能 | | |||

| | torch.complex | 暂不支持float16类型输入 | | |||

| | torch.fmin | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.kron | 暂不支持入参是不同复数类型 | | |||

| | torch.sort | 暂不支持`stable`入参 | | |||

| | torch.float_power | 不支持复数输入 | | |||

| | torch.add |暂不支持当两个输入都为bool类型时, 返回bool类型 | | |||

| | torch.polygamma | 当入参`n`为0时,结果可能不正确 | | |||

| | torch.matmul | GPU上暂不支持int类型输入 | | |||

| | torch.geqrf | 暂不支持大于2维的输入 | | |||

| | torch.repeat_interleave | 暂不支持`output_size`入参 | | |||

| | torch.index_reduce | 暂不支持`reduce`="mean" | | |||

| | torch.view_as_complex | 输出张量暂时以数据拷贝方式返回,无法提供视图模式 | | |||

| | torch.pad | 当`padding_mode`为'reflect'时,不支持5维的输入 | | |||

| | torch.corrcoef | 暂不支持复数类型入参 | | |||

| | torch.symeig | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.fmax | GPU和Ascend上暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.fft | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.rfft | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | torch.norm | 1.当`p`为0/1/-1/-2时,矩阵范数不支持;2.不支持`p`为inf/-inf/0/1/-1/2/-2之外的int/float类型。| | |||

| | torch.poisson | Ascend上暂不支持反向梯度 | | |||

| | torch.xlogy | Ascend 上当前只支持float16 和float32输入 | | |||

| | torch.digamma | Ascend上仅支持float16和float32类型入参 | | |||

| | torch.lgamma | Ascend上仅支持float16和float32类型入参 | | |||

| ### <span id="jump3">Tensor</span> | |||

| | MSAdapter接口 | 约束条件 | | |||

| | --------------- | -------------- | | |||

| | Tensor.bool | 不支持memory_format参数 | | |||

| | Tensor.expand | 类型限制,只支持Tensor[Float16], Tensor[Float32], Tensor[Int32], Tensor[Int8], Tensor[UInt8] | | |||

| | Tensor.float | 不支持memory_format | | |||

| | Tensor.scatter | 不支持reduce='mutiply', Ascend不支持reduce='add', 不支持indices.shape != src.shape | | |||

| | Tensor.std | 不支持复数和float64输入 | | |||

| | Tensor.xlogy | Ascend 上当前只支持float16 和float32输入 | | |||

| | Tensor.abs_ | 暂不支持图模式 | | |||

| | Tensor.absolute_ | 暂不支持图模式 | | |||

| | Tensor.acos_ | 暂不支持图模式 | | |||

| | Tensor.arccos_ | 暂不支持图模式 | | |||

| | Tensor.addr_ | 暂不支持图模式 | | |||

| | Tensor.add_ | 暂不支持图模式 | | |||

| | Tensor.addbmm_ | 暂不支持图模式 | | |||

| | Tensor.addcdiv_ | 暂不支持图模式 | | |||

| | Tensor.addcmul_ | 暂不支持图模式 | | |||

| | Tensor.addmm_ | 暂不支持图模式 | | |||

| | Tensor.addmv_ | 暂不支持图模式 | | |||

| | Tensor.addr_ | 暂不支持图模式 | | |||

| | Tensor.asin_ | 暂不支持图模式 | | |||

| | Tensor.arcsin_ | 暂不支持图模式 | | |||

| | Tensor.atan_ | 暂不支持图模式 | | |||

| | Tensor.arctan_ | 暂不支持图模式 | | |||

| | Tensor.atan2_ | 暂不支持图模式 | | |||

| | Tensor.arctan2_ | 暂不支持图模式 | | |||

| | Tensor.baddbmm_ | 暂不支持图模式 | | |||

| | Tensor.bitwise_not_ | 暂不支持图模式 | | |||

| | Tensor.bitwise_and_ | 暂不支持图模式 | | |||

| | Tensor.bitwise_or_ | 暂不支持图模式 | | |||

| | Tensor.bitwise_xor_ | 暂不支持图模式 | | |||

| | Tensor.clamp_ | 暂不支持图模式 | | |||

| | Tensor.clip_ | 暂不支持图模式 | | |||

| | Tensor.copy_ | 暂不支持图模式 | | |||

| | Tensor.copysign_ | 暂不支持图模式 | | |||

| | Tensor.acosh_ | 暂不支持图模式 | | |||

| | Tensor.arccosh_ | 暂不支持图模式 | | |||

| | Tensor.cumprod_ | 暂不支持图模式 | | |||

| | Tensor.div_ | 暂不支持图模式 | | |||

| | Tensor.divide_ | 暂不支持图模式 | | |||

| | Tensor.eq_ | 暂不支持图模式 | | |||

| | Tensor.expm1_ | 暂不支持图模式 | | |||

| | Tensor.fix_ | 暂不支持图模式 | | |||

| | Tensor.fill_ | 暂不支持图模式 | | |||

| | Tensor.float_power_ | 暂不支持图模式 | | |||

| | Tensor.floor_ | 暂不支持图模式 | | |||

| | Tensor.fmod_ | 暂不支持图模式 | | |||

| | Tensor.ge_ | 暂不支持图模式 | | |||

| | Tensor.greater_equal_ | 暂不支持图模式 | | |||

| | Tensor.gt_ | 暂不支持图模式 | | |||

| | Tensor.greater_ | 暂不支持图模式 | | |||

| | Tensor.hypot_ | 暂不支持图模式 | | |||

| | Tensor.le_ | 暂不支持图模式 | | |||

| | Tensor.less_equal_ | 暂不支持图模式 | | |||

| | Tensor.lgamma_ | 暂不支持图模式 | | |||

| | Tensor.logical_xor_ | 暂不支持图模式 | | |||

| | Tensor.lt_ | 暂不支持图模式 | | |||

| | Tensor.less_ | 暂不支持图模式 | | |||

| | Tensor.lu | 暂不支持图模式,入参`get_infos=True`时暂不支持扫描错误信息, 暂不支持`pivot=False`,仅支持二维方阵输入,不支持(*,M,N)形式输入 | | |||

| | Tensor.lu_solve | 暂不支持图模式,入参`left=False`暂不支持,入参`LU`仅支持二维方阵输入,不支持三维输入 | | |||

| | Tensor.lstsq | 暂时不支持返回第二个参数QR, 暂不支持图模式,反向梯度暂不支持 | | |||

| | Tensor.mul_ | 暂不支持图模式 | | |||

| | Tensor.multiply_ | 暂不支持图模式 | | |||

| | Tensor.mvlgamma_ | 暂不支持图模式 | | |||

| | Tensor.ne_ | 暂不支持图模式 | | |||

| | Tensor.not_equal_ | 暂不支持图模式 | | |||

| | Tensor.neg_ | 暂不支持图模式 | | |||

| | Tensor.negative_ | 暂不支持图模式 | | |||

| | Tensor.pow_ | 暂不支持图模式 | | |||

| | Tensor.reciprocal_ | 暂不支持图模式 | | |||

| | Tensor.renorm_ | 暂不支持图模式 | | |||

| | Tensor.resize_ | 暂不支持图模式 | | |||

| | Tensor.round_ | 暂不支持图模式 | | |||

| | Tensor.sigmoid_ | 暂不支持图模式 | | |||

| | Tensor.sign_ | 暂不支持图模式 | | |||

| | Tensor.sin_ | 暂不支持图模式 | | |||

| | Tensor.sinc_ | 暂不支持图模式 | | |||

| | Tensor.sinh_ | 暂不支持图模式 | | |||

| | Tensor.asinh_ | 暂不支持图模式 | | |||

| | Tensor.square_ | 暂不支持图模式 | | |||

| | Tensor.sqrt_ | 暂不支持图模式 | | |||

| | Tensor.squeeze_ | 暂不支持图模式 | | |||

| | Tensor.sub_ | 暂不支持图模式 | | |||

| | Tensor.tan_ | 暂不支持图模式 | | |||

| | Tensor.tanh_ | 暂不支持图模式 | | |||

| | Tensor.atanh_ | 暂不支持图模式 | | |||

| | Tensor.arctanh_ | 暂不支持图模式 | | |||

| | Tensor.transpose_ | 暂不支持图模式 | | |||

| | Tensor.trunc_ | 暂不支持图模式 | | |||

| | Tensor.unsqueeze_ | 暂不支持图模式 | | |||

| | Tensor.zero_ | 暂不支持图模式 | | |||

| | Tensor.svd | Ascend上暂不支持图模式,Ascend上反向梯度暂不支持 | | |||

| | Tensor.nextafter | CPU上暂不支持float32输入 | | |||

| | Tensor.matrix_power | GPU上暂不支持参数`n`小于0 | | |||

| | Tensor.i0 | Ascend上暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.index_add | 暂不支持二维以上的`input`或`dim`为1 | | |||

| | Tensor.nextafter_ | CPU上暂不支持float32输入 | | |||

| | Tensor.fmin | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.imag | 暂不支持图模式 | | |||

| | Tensor.scatter_reduce | 暂不支持`reduce`="mean" | | |||

| | Tensor.scatter_reduce_ | 暂不支持`reduce`="mean"和图模式 | | |||

| | Tensor.neg | 暂不支持uint32, uint64输入 | | |||

| | Tensor.add | 暂不支持当两个输入都为bool类型时, 返回bool类型 | | |||

| | Tensor.polygamma | 当入参`n`为0时,结果可能不正确 | | |||

| | Tensor.matmul | GPU上暂不支持int类型输入 | | |||

| | Tensor.geqrf | 暂不支持大于2维的输入 | | |||

| | Tensor.repeat_interleave | 暂不支持`output_size`入参 | | |||

| | Tensor.index_reduce | 暂不支持`reduce`="mean" | | |||

| | Tensor.index_reduce_ | 暂不支持`reduce`="mean"和图模式 | | |||

| | Tensor.masked_scatter | 暂不支持`input`广播到`mask`和GPU后端 | | |||

| | Tensor.index_put | Ascend上暂不支持`accumulate`=False | | |||

| | Tensor.index_put_ | Ascend上暂不支持`accumulate`=False,暂不支持图模式 | | |||

| | Tensor.corrcoef | 暂不支持复数类型入参 | | |||

| | Tensor.exponential_ | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.geometric_ | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.log_normal_ | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.symeig | 暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.fmax | GPU和Ascend上暂不支持反向梯度, 暂不支持图模式 | | |||

| | Tensor.norm | 1.当`p`为0/1/-1/-2时,矩阵范数不支持;2.不支持`p`为inf/-inf/0/1/-1/2/-2之外的int/float类型。| | |||

| | Tensor.digamma | Ascend上仅支持float16和float32类型入参 | | |||

| | Tensor.lgamma | Ascend上仅支持float16和float32类型入参 | | |||

| | Tensor.arcsinh_ | 暂不支持图模式 | | |||

| ### <span id="jump4">Torch.nn</span> | |||

| | MSAdapter接口 | 约束条件 | | |||

| | --------------- | -------------- | | |||

| | nn.LPPool1d | Ascend上不支持float64 | | |||

| | nn.LPPool2d | Ascend上不支持float64 | | |||

| | nn.ELU | Alpha仅支持1.0 | | |||

| | nn.Hardshrink | 不支持float64 | | |||

| | nn.Hardtanh | 不支持float64 | | |||

| | nn.Hardswish | 不支持float64 | | |||

| | nn.LeakyReLU | 不支持float64 | | |||

| | nn.PReLU | 不支持float64 | | |||

| | nn.ReLU6 | 不支持float64 | | |||

| | nn.RReLU | inplace不支持图模式 | | |||

| | nn.SELU | inplace不支持图模式 | | |||

| | nn.CELU | inplace不支持图模式 | | |||

| | nn.Mish | inplace不支持图模式 | | |||

| | nn.Threshold | inplace不支持图模式 | | |||

| | nn.Softshrink | 不支持float64 | | |||

| | nn.LogSoftmax | 不支持float64,不支持8维及以上 | | |||

| | nn.Linear | device, dtype参数不支持 | | |||

| | nn.UpsamplingNearest2d | 不支持size为none | | |||

| | nn.Conv1d | 1.`padding_mode` 只支持'zeros';2.Ascend上,`groups`只支持1或者与`in_channels`相等 | | |||

| | nn.Conv2d | 1.`padding_mode` 只支持'zeros'; 2.Ascend上,`groups`只支持1或者与`in_channels`相等 | | |||

| | nn.Conv3d | 1.不支持复数;2.`padding_mode`只支持'zeros';3.Ascend上`groups`, `dialtion`参数只支持为1 | | |||

| | nn.ConvTranspose1d | 1.`output_padding`,`output_size`不支持; 2.Ascend上`groups`只支持1或者与`in_channels`相等 | | |||

| | nn.ConvTranspose2d | 1.`output_padding`,`output_size`不支持; 2.Ascend上`groups`只支持1或者与`in_channels`相等 | | |||

| | nn.AdaptiveLogSoftmaxWithLoss | 不支持图模式 | | |||

| | nn.LSTM | 当前`proj_size`不支持 | | |||

| | nn.ReflectionPad1d |`padding`参数不支持负数取值 | | |||

| | nn.ReflectionPad2d | `padding`参数不支持负数取值 | | |||

| | nn.ReflectionPad3d | `padding`参数不支持负数取值 | | |||

| | nn.Transformer | 不支持等号赋值关键字参数。不支持空tensor输入 | | |||

| | nn.TransformerEncoder | 不支持等号赋值关键字参数。不支持空tensor输入 | | |||

| | nn.TransformerDecoder | 不支持等号赋值关键字参数。不支持空tensor输入 | | |||

| | nn.TransformerEncoderLayer | 不支持等号赋值关键字参数。不支持空tensor输入 | | |||

| | nn.TransformerDecoderLayer | 不支持等号赋值关键字参数。不支持空tensor输入 | | |||

| | nn.AdaptiveMaxPool1d | Ascend上不支持`return_indices` | | |||

| | nn.AdaptiveMaxPool2d | Ascend上不支持`return_indices` | | |||

| | nn.Embedding | 1.`scale_grad_by_freq`, `sparse`不支持; 2.`norm_type`只能为2 | | |||

| ### <span id="jump5">nn.functional</span> | |||

| | MSAdapter接口 | 约束条件 | | |||

| | --------------- | -------------- | | |||

| | functional.lp_pool1d | Ascend上不支持float64 | | |||

| | functional.lp_pool2d | Ascend上不支持float64 | | |||

| | functional.prelu | 不支持float64 | | |||

| | functional.rrelu | 1.inplace不支持图模式; 2.`training`入参不支持 | | |||

| | functional.softshrink | 不支持float64 | | |||

| | functional.log_softmax | 不支持float64 | | |||

| | functional.dropout1d | inplace不支持图模式 | | |||

| | functional.dropout2d | inplace不支持图模式 | | |||

| | functional.dropout3d | inplace不支持图模式 | | |||

| | functional.conv3d | Ascend上`groups`, `dialtion`参数只支持1 | | |||

| | functional.upsample_bilinear | 输入张量必须是4维 | | |||

| | functional.interpolate | `recompute_scale_factor` 及 `antialias` 入参不支持。 只支持以下三种模式, 其中,'nearest'只支持4D或5D输入, 'bilinear'只支持4D输入, 'linear'只支持3D输入。| | |||

| | functional.conv1d | Ascend上,`groups`只支持1或者与`input`的通道数相等 | | |||

| | functional.conv2d | Ascend上,`groups`只支持1或者与`input`的通道数相等 | | |||

| | functional.conv_transpose1d | 1.`output_padding`暂不支持; 2.Ascend上`groups`只支持1或者与`input`的通道数相等 | | |||

| | functional.conv_transpose2d | 1.`output_padding`暂不支持; 2.Ascend上`groups`只支持1或者与`input`的通道数相等 | | |||

| | functional.adaptive_max_pool1d | Ascend上不支持`return_indices` | | |||

| | functional.adaptive_max_pool2d | Ascend上不支持`return_indices` | | |||

| | functional.instance_norm | 图模式下,训练模式时, 暂不支持`running_mean`和`running_var` | | |||

| | functional.batch_norm | 图模式下,训练模式时, 暂不支持`running_mean`及`running_var` | | |||

| | functional.embedding | 1.`scale_grad_by_freq`, `sparse`不支持; 2.`norm_type`只能为2 | | |||

| ### <span id="jump6">torch.linalg</span> | |||

| | MSAdapter接口 | 约束条件 | | |||

| | --------------- | -------------- | | |||

| | lu | 暂不支持图模式,暂不支持入参`pivot=False`, 仅支持二维方阵输入,不支持(*,M,N)形式输入 | | |||

| | lu_solve | 暂不支持图模式,入参`left=False`不支持,入参`LU`不支持三维输入 | | |||

| | lu_factor | 暂不支持图模式,仅支持二维方阵输入,不支持(*,M,N)形式输入 | | |||

| | lu_factor_ex | 暂不支持图模式,入参`get_infos=True`时暂不支持扫描错误信息, 暂不支持`pivot=False`,仅支持二维方阵输入,不支持(*,M,N)形式输入 | | |||

| | lstsq| 暂不支持图模式,反向梯度暂不支持 | | |||

| | eigvals | 暂不支持图模式,反向梯度暂不支持 | | |||

| | svd | `driver`参数只支持None, Ascend上不支持反向梯度, Ascend上暂不支持图模式 | | |||

| | svdvals | `driver`参数只支持None,Ascend上不支持反向梯度, Ascend上暂不支持图模式 | | |||

| | norm | 暂不支持复数输入, `ord`参数暂不支持浮点型输入, Ascend上暂不支持ord为nuc模式、float(`inf`)模式和整形数输入 | | |||

| | vector_norm | 暂不支持复数输入, `ord`参数暂不支持浮点型输入 | | |||

| | matrix_power | GPU上暂不支持参数`n`小于0 | | |||

| | eigvalsh | 反向梯度暂不支持 | | |||

| | eigh | 暂不支持图模式,反向梯度暂不支持 | | |||

| | solve | 反向梯度暂不支持 | | |||

+ 266

- 0

ConstraintList_en.md

View File

| @@ -0,0 +1,266 @@ | |||

| English | [简体中文](ConstraintList.md) | |||

| - [API Constraints List](#jump1) | |||

| - [Torch](#jump2) | |||

| - [Tensor](#jump3) | |||

| - [Torch.nn](#jump4) | |||

| - [nn.functional](#jump5) | |||

| - [torch.linalg](#jump6) | |||

| ## <span id="jump1">API Constraints List</span> | |||

| ### <span id="jump2">Torch</span> | |||

| | MSAdapter APIs | Constraint conditions | | |||

| | --------------- | -------------- | | |||

| | torch.frombuffer | Currently not support require_grad | | |||

| | torch.multinomial | Currently not support input Generator | | |||

| | torch.randint | Currently not support input Generator | | |||

| | torch.randperm | Currently not support input Generator | | |||

| | torch.imag | Currently not support on GRAPH mode | | |||

| | torch.max | Currently not support other, Not support on GRAPH mode | | |||

| | torch.sum | Currently not support on GRAPH mode | | |||

| | torch.lu | Currently not support GRAPH mode, input `get_infos=True` currently cannot scan the error, mindspore not support `pivot=False`,, only support 2-D square matrix as input, not support (*,M,N) shape input | | |||

| | torch.lu_solve | Currently not support GRAPH mode, input `left=False` not support, only support 2-D square matrix as input, not support 3-D input | | |||

| | torch.lstsq | Currently not support return the second result QR, not support on GRAPH mode, not support gradient computation | | |||

| | torch.svd | Currently not support GRAPH mode on Ascend, not support gradient computation on Ascend | | |||

| | torch.nextafter | Currently not support float32 on CPU | | |||

| | torch.matrix_power | Currently not support `n` < 0 on GPU | | |||

| | torch.i0 | Currently not support gradient computation on Ascend, currently not support GRAPH mode on Ascend | | |||

| | torch.index_add | Not support `input` of more than 2-D or `dim` >= 1. Not suppor GRAPH mode | | |||

| | torch.index_copy | Not support `input` of more than 2-D or `dim` >= 1. Not suppor GRAPH mode | | |||

| | torch.scatter_reduce | Currently not support `reduce`="mean" | | |||

| | torch.histogramdd | Currently not support float64 input | | |||

| | torch.asarray | Currently not support input `device`, `copy`, `requires_grad` as configuration | | |||

| | torch.complex | Currently not support float16 input | | |||

| | torch.fmin | Currently not support gradient computation, not support GRAPH mode | | |||

| | torch.kron | Currently not support different complex types for inputs | | |||

| | torch.sort | Currently not support `stable` | | |||

| | torch.float_power | Currently not support complex input | | |||

| | torch.add | Currently not support both bool type input and return bool output | | |||

| | torch.polygamma | When `n` is zero, the result may be wrong | | |||

| | torch.matmul | Currently not support int type input on GPU | | |||

| | torch.geqrf | Currently not support input ndim > 2 | | |||

| | torch.repeat_interleave | Currently not support `output_size` | | |||

| | torch.index_reduce | Currently not support `reduce`="mean" | | |||

| | torch.view_as_complex | Currently the output tensor is provided by data copying instead of a view of shared memory | | |||

| | torch.pad | when `padding_mode` is 'reflect', not support 5D input | | |||

| | torch.corrcoef | Currently not support complex inputs | | |||

| | torch.symeig | Currently not support gradient computation, not support GRAPH mode | | |||

| | torch.fmax | Currently not support gradient computation on GPU and Ascend, not support GRAPH mode on GPU and Ascend | | |||

| | torch.fft | Currently not support gradient computation, not support GRAPH mode | | |||

| | torch.rfft | Currently not support gradient computation, not support GRAPH mode | | |||

| | torch.poisson| Currently not support gradient computation on Ascend | | |||

| | torch.norm | 1.when `p` in 0/1/-1/-2,matrix-norm not support;2.not support `p` in int/float type beside inf/-inf/0/1/-1/2/-2 | | |||

| | torch.xlogy | Currently only support float16 and float32 on Ascend | | |||

| | torch.digamma | Currently only support float16 and float32 on Ascend | | |||

| | torch.lgamma | Currently only support float16 and float32 on Ascend | | |||

| ### <span id="jump3">Tensor</span> | |||

| | MSAdapter APIs | Constraint conditions | | |||

| | --------------- | -------------- | | |||

| | Tensor.bool | Not support parameter memory_format| | |||

| | Tensor.expand | Type is constrained, only support Tensor[Float16], Tensor[Float32], Tensor[Int32], Tensor[Int8], Tensor[UInt8] | | |||

| | Tensor.float | Currently not support memory_format | | |||

| | Tensor.scatter | Currently not support reduce='mutiply', AscendNot support reduce='add', Not support indices.shape != src.shape | | |||

| | Tensor.std | Currently not support complex number and float64 input | | |||

| | Tensor.xlogy | Currently only support float16 and float32 on Ascend | | |||

| | Tensor.abs_ | Currently not support on GRAPH mode | | |||

| | Tensor.absolute_ | Currently not support on GRAPH mode | | |||

| | Tensor.acos_ | Currently not support on GRAPH mode | | |||

| | Tensor.arccos_ | Currently not support on GRAPH mode | | |||

| | Tensor.addr_ | Currently not support on GRAPH mode | | |||

| | Tensor.add_ | Currently not support on GRAPH mode | | |||

| | Tensor.addbmm_ | Currently not support on GRAPH mode | | |||

| | Tensor.addcdiv_ | Currently not support on GRAPH mode | | |||

| | Tensor.addcmul_ | Currently not support on GRAPH mode | | |||

| | Tensor.addmm_ | Currently not support on GRAPH mode | | |||

| | Tensor.addmv_ | Currently not support on GRAPH mode | | |||

| | Tensor.addr_ | Currently not support on GRAPH mode | | |||

| | Tensor.asin_ | Currently not support on GRAPH mode | | |||

| | Tensor.arcsin_ | Currently not support on GRAPH mode | | |||

| | Tensor.atan_ | Currently not support on GRAPH mode | | |||

| | Tensor.arctan_ | Currently not support on GRAPH mode | | |||

| | Tensor.atan2_ | Currently not support on GRAPH mode | | |||

| | Tensor.arctan2_ | Currently not support on GRAPH mode | | |||

| | Tensor.baddbmm_ | Currently not support on GRAPH mode | | |||

| | Tensor.bitwise_not_ | Currently not support on GRAPH mode | | |||

| | Tensor.bitwise_and_ | Currently not support on GRAPH mode | | |||

| | Tensor.bitwise_or_ | Currently not support on GRAPH mode | | |||

| | Tensor.bitwise_xor_ | Currently not support on GRAPH mode | | |||

| | Tensor.clamp_ | Currently not support on GRAPH mode | | |||

| | Tensor.clip_ | Currently not support on GRAPH mode | | |||

| | Tensor.copy_ | Currently not support on GRAPH mode | | |||

| | Tensor.copysign_ | Currently not support on GRAPH mode | | |||

| | Tensor.acosh_ | Currently not support on GRAPH mode | | |||

| | Tensor.arccosh_ | Currently not support on GRAPH mode | | |||

| | Tensor.cumprod_ | Currently not support on GRAPH mode | | |||

| | Tensor.div_ | Currently not support on GRAPH mode | | |||

| | Tensor.divide_ | Currently not support on GRAPH mode | | |||

| | Tensor.eq_ | Currently not support on GRAPH mode | | |||

| | Tensor.expm1_ | Currently not support on GRAPH mode | | |||

| | Tensor.fix_ | Currently not support on GRAPH mode | | |||

| | Tensor.fill_ | Currently not support on GRAPH mode | | |||

| | Tensor.float_power_ | Currently not support on GRAPH mode | | |||

| | Tensor.floor_ | Currently not support on GRAPH mode | | |||

| | Tensor.fmod_ | Currently not support on GRAPH mode | | |||

| | Tensor.ge_ | Currently not support on GRAPH mode | | |||

| | Tensor.greater_equal_ | Currently not support on GRAPH mode | | |||

| | Tensor.gt_ | Currently not support on GRAPH mode | | |||

| | Tensor.greater_ | Currently not support on GRAPH mode | | |||

| | Tensor.hypot_ | Currently not support on GRAPH mode | | |||

| | Tensor.le_ | Currently not support on GRAPH mode | | |||

| | Tensor.less_equal_ | Currently not support on GRAPH mode | | |||

| | Tensor.lgamma_ | Currently not support on GRAPH mode | | |||

| | Tensor.logical_xor_ | Currently not support on GRAPH mode | | |||

| | Tensor.lt_ | Currently not support on GRAPH mode | | |||

| | Tensor.less_ | Currently not support on GRAPH mode | | |||

| | Tensor.lu | Currently not support GRAPH mode, input `get_infos=True` currently cannot scan the error, not support `pivot=False`, only support 2-D square matrix as input, not support (*,M,N) shape input | | |||

| | Tensor.lu_solve | Currently not support GRAPH mode, input `left=False` not support, only support 2-D square matrix as input, not support 3-D input | | |||

| | Tensor.lstsq | Not support return the second result QR, not support on GRAPH mode, not support gradient computation | | |||

| | Tensor.mul_ | Currently not support on GRAPH mode | | |||

| | Tensor.multiply_ | Currently not support on GRAPH mode | | |||

| | Tensor.mvlgamma_ | Currently not support on GRAPH mode | | |||

| | Tensor.ne_ | Currently not support on GRAPH mode | | |||

| | Tensor.not_equal_ | Currently not support on GRAPH mode | | |||

| | Tensor.neg_ | Currently not support on GRAPH mode | | |||

| | Tensor.negative_ | Currently not support on GRAPH mode | | |||

| | Tensor.pow_ | Currently not support on GRAPH mode | | |||

| | Tensor.reciprocal_ | Currently not support on GRAPH mode | | |||

| | Tensor.renorm_ | Currently not support on GRAPH mode | | |||

| | Tensor.resize_ | Currently not support on GRAPH mode | | |||

| | Tensor.round_ | Currently not support on GRAPH mode | | |||

| | Tensor.sigmoid_ | Currently not support on GRAPH mode | | |||

| | Tensor.sign_ | Currently not support on GRAPH mode | | |||

| | Tensor.sin_ | Currently not support on GRAPH mode | | |||

| | Tensor.sinc_ | Currently not support on GRAPH mode | | |||

| | Tensor.sinh_ | Currently not support on GRAPH mode | | |||

| | Tensor.asinh_ | Currently not support on GRAPH mode | | |||

| | Tensor.square_ | Currently not support on GRAPH mode | | |||

| | Tensor.sqrt_ | Currently not support on GRAPH mode | | |||

| | Tensor.squeeze_ | Currently not support on GRAPH mode | | |||

| | Tensor.sub_ | Currently not support on GRAPH mode | | |||

| | Tensor.tan_ | Currently not support on GRAPH mode | | |||

| | Tensor.tanh_ | Currently not support on GRAPH mode | | |||

| | Tensor.atanh_ | Currently not support on GRAPH mode | | |||

| | Tensor.arctanh_ | Currently not support on GRAPH mode | | |||

| | Tensor.transpose_ | Currently not support on GRAPH mode | | |||

| | Tensor.trunc_ | Currently not support on GRAPH mode | | |||

| | Tensor.unsqueeze_ | Currently not support on GRAPH mode | | |||

| | Tensor.zero_ | Currently not support on GRAPH mode | | |||

| | Tensor.svd | Currently not support GRAPH mode on Ascend, not support gradient computation on Ascend | | |||

| | Tensor.nextafter | Currently not support float32 on CPU | | |||

| | Tensor.matrix_power | Currently not support `n` < 0 on GPU | | |||

| | Tensor.i0 | Currently not support gradient computation on Ascend, currently not support GRAPH mode on Ascend | | |||

| | Tensor.index_add | Not support `input` of more than 2-D or `dim` >= 1 | | |||

| | Tensor.nextafter_ | Currently not support float32 on CPU | | |||

| | Tensor.fmin | Currently not support gradient computation, not support GRAPH mode | | |||

| | Tensor.imag | Currently not support on GRAPH mode | | |||

| | Tensor.scatter_reduce | Currently not support `reduce`="mean" | | |||

| | Tensor.scatter_reduce_ | Currently not support `reduce`="mean" and GRAPH mode | | |||

| | Tensor.neg | Currently not support uint32, uint64 | | |||

| | Tensor.add | Currently not support both bool type input and return bool output | | |||

| | Tensor.polygamma | When `n` is zero, the result may be wrong | | |||

| | Tensor.matmul | Currently not support int type input on GPU | | |||

| | Tensor.geqrf | Currently not support input ndim > 2 | | |||

| | Tensor.repeat_interleave | Currently not support `output_size` | | |||

| | Tensor.index_reduce | Currently not support `reduce`="mean" | | |||

| | Tensor.index_reduce_ | Currently not support `reduce`="mean" and GRAPH mode | | |||

| | Tensor.masked_scatter | Currently not support on GPU, or `input` to be broadcasted to the shape of `mask` | | |||

| | Tensor.index_put | Currently not support `accumulate`=False on Ascend | | |||

| | Tensor.index_put_ | Currently not support `accumulate`=False on Ascend or on GRAPH mode | | |||

| | Tensor.corrcoef | Currently not support complex inputs | | |||

| | Tensor.exponential_ | Currently not support gradient computation, not support GRAPH mode | | |||

| | Tensor.geometric_ | Currently not support gradient computation, not support GRAPH mode | | |||

| | Tensor.log_normal_ | Currently not support gradient computation, not support GRAPH mode | | |||

| | Tensor.symeig | Currently not support gradient computation, not support GRAPH mode | | |||

| | Tensor.fmax | Currently not support gradient computation on GPU and Ascend, not support GRAPH mode on GPU and Ascend | | |||

| | Tensor.norm | 1.when `p` in 0/1/-1/-2,matrix-norm not support;2.not support `p` in int/float type beside inf/-inf/0/1/-1/2/-2 | | |||

| | Tensor.digamma | Currently only support float16 and float32 on Ascend | | |||

| | Tensor.lgamma | Currently only support float16 and float32 on Ascend | | |||

| | Tensor.arcsinh_ | Currently not support on GRAPH mode | | |||

| ### <span id="jump4">Torch.nn</span> | |||

| | MSAdapter APIs | Constraint conditions | | |||

| | --------------- | -------------- | | |||

| | nn.LPPool1d | Not support float64 on Ascend | | |||

| | nn.LPPool2d | Not support float64 on Ascend | | |||

| | nn.ELU | only support Alpha = 1.0 | | |||

| | nn.Hardshrink | Not support float64 | | |||

| | nn.Hardtanh | Not support float64 | | |||

| | nn.Hardswish | Not support float64 | | |||

| | nn.LeakyReLU | Not support float64 | | |||

| | nn.PReLU | Not support float64 | | |||

| | nn.ReLU6 | Not support float64 | | |||

| | nn.RReLU | inplace not support GRAPH mode | | |||

| | nn.SELU | inplace not support GRAPH mode | | |||

| | nn.CELU | inplace not support GRAPH mode | | |||

| | nn.Mish | inplace not support GRAPH mode | | |||

| | nn.Threshold | inplace not support GRAPH mode | | |||

| | nn.Softshrink | Not support float64 | | |||

| | nn.LogSoftmax | Not support float64, Not support 8D and higher dimension | | |||

| | nn.Linear | device, dtype parameter Not support | | |||

| | nn.UpsamplingNearest2d | Not support size=None | | |||

| | nn.Conv1d | 1.`padding_mode` only support 'zeros'; 2.On Ascend, `groups` can only support 1 or equal to `in_channels` | | |||

| | nn.Conv2d | 1.`padding_mode` only support 'zeros'; 2.On Ascend, `groups` can only support 1 or equal to `in_channels` | | |||

| | nn.Conv3d | 1.Not support complex number; 2. `padding_mode` only support 'zeros'; 3.`groups`,`dialtion` only support 1 on Ascend | | |||

| | nn.ConvTranspose1d | 1.`output_padding`,`output_size` not support; 2.On Ascend, `groups` can only support 1 or equal to `in_channels` | | |||

| | nn.ConvTranspose2d | 1.`output_padding`,`output_size` not support. 2.On Ascend, `groups` can only support 1 or equal to `in_channels` | | |||

| | nn.AdaptiveLogSoftmaxWithLoss | Not support GRAPH mode | | |||

| | nn.LSTM | Currently `proj_size` not support | | |||

| | nn.ReflectionPad1d | `padding` not support negative values | | |||

| | nn.ReflectionPad2d | `padding` not support negative values | | |||

| | nn.ReflectionPad3d | `padding` not support negative values | | |||

| | nn.Transformer | Not support assigning values to keyword arguments with `=` operator. Not support input tensors of shape 0 | | |||

| | nn.TransformerEncoder | Not support assigning values to keyword arguments with `=` operator. Not support input tensors of shape 0 | | |||

| | nn.TransformerDecoder | Not support assigning values to keyword arguments with `=` operator. Not support input tensors of shape 0 | | |||

| | nn.TransformerEncoderLayer | Not support assigning values to keyword arguments with `=` operator. Not support input tensors of shape 0 | | |||

| | nn.TransformerDecoderLayer | Not support assigning values to keyword arguments with `=` operator. Not support input tensors of shape 0 | | |||

| | nn.AdaptiveMaxPool1d | `return_indices` not support on Ascend | | |||

| | nn.AdaptiveMaxPool2d | `return_indices` not support on Ascend | | |||

| | nn.Embedding | 1. `scale_grad_by_freq`, `sparse` is not supported; 2. `norm_type` can only be 2 | | |||

| ### <span id="jump5">nn.functional</span> | |||

| | MSAdapter APIs | Constraint conditions | | |||

| | --------------- | -------------- | | |||

| | functional.lp_pool1d | Not support float64 on Ascend | | |||

| | functional.lp_pool2d | Not support float64 on Ascend | | |||

| | functional.prelu | Not support float64 | | |||

| | functional.rrelu | 1.inplace not support GRAPH mode; 2.`training` not support | | |||

| | functional.softshrink | Not support float64 | | |||

| | functional.log_softmax | Not support float64 | | |||

| | functional.dropout1d | inplace not support GRAPH mode | | |||

| | functional.dropout2d | inplace not support GRAPH mode | | |||

| | functional.dropout3d | inplace not support GRAPH mode | | |||

| | functional.conv3d | `groups`,`dialtion` only support 1 on Ascend | | |||

| | functional.upsample_bilinear | Input tensor must be a 4-D tensor | | |||

| | functional.interpolate | `recompute_scale_factor` and `antialias` not support. it only supported the following 3 modes. 'nearest' only support 4D or 5D input, 'bilinear'only support 4D input, 'linear' only support 3D input | | |||

| | functional.conv1d | On Ascend, `groups` can only be 1 or equal to `input` channel | | |||

| | functional.conv2d | On Ascend, `groups` can only be 1 or equal to `input` channel | | |||

| | functional.conv_transpose1d | 1.`output_padding` not support; 2.On Ascend, `groups` can only be 1 or equal to `input` channel | | |||

| | functional.conv_transpose2d | 1.`output_padding` not support; 2.On Ascend, `groups` can only be 1 or equal to `input` channel | | |||

| | functional.adaptive_max_pool1d | `return_indices` not support on Ascend | | |||

| | functional.adaptive_max_pool2d | `return_indices` not support on Ascend | | |||

| | functional.instance_norm | In graph mode, when training mode, `running_mean` and `running_var` are not supported | | |||

| | functional.batch_norm | In graph mode, when training mode, `running_mean` and `running_var` are not supported | | |||

| | functional.embedding | 1. 'scale_grad_by_freq', 'sparse' is not supported; 2. 'norm_type' can only be 2 | | |||

| ### <span id="jump6">torch.linalg</span> | |||

| | MSAdapter APIs | Constraint conditions | | |||

| | --------------- | -------------- | | |||

| | lu | Currently not support on GRAPH mode, not support `pivot=False`, only support 2-D square matrix as input, not support (*,M,N) shape input | | |||

| | lu_solve | Currently not support on GRAPH mode, input`left=False` not support, only support 2-D square matrix as input, not support 3-D input | | |||

| | lu_factor | Currently not support on GRAPH mode, only support 2-D square matrix as input, not support (*,M,N) shape input | | |||

| | lu_factor_ex | Currently not support on GRAPH mode,Input `get_infos=True` currently cannot scan the error, not support `pivot=False`, only support 2-D square matrix as input, not support (*,M,N) shape input | | |||

| | lstsq | Currently not support on GRAPH mode, not support gradient computation | | |||

| | eigvals | Currently not support GRAPH mode, not support gradient computation | | |||

| | svd | `driver` only support None as input, not support gradient computation on Ascend, currently not support GRAPH mode on Ascend | | |||

| | svdvals | `driver` only support None as input, not support gradient computation on Ascend, currently not support on GRAPH mode on Ascend | | |||

| | norm | Currently not support complex input, `ord` not support float input, not support ord is nuclear norm, float('inf') or int on Ascend | | |||

| | vector_norm | Currently not support complex input, `ord` not support float input | | |||

| | matrix_power | Currently not support `n` < 0 on GPU | | |||

| | eigvalsh | not support gradient computation | | |||

| | eigh | Currently not support on GRAPH mode, not support gradient computation | | |||

| | solve | Currently not support gradient computation | | |||

+ 197

- 0

Debugging_and_Tuning.md

View File

| @@ -0,0 +1,197 @@ | |||

| # MSAdapter调试调优指南 | |||

| ## 1.简介 | |||

| MSAdapter是一款将PyTorch训练脚本高效迁移至MindSpore框架执行的实用工具,旨在不改变原生PyTorch用户的编程使用习惯下,使得PyTorch风格代码能在昇腾硬件上获得高效性能。用户只需要将PyTorch源代码中`import torch`替换为`import msadapter.pytorch`,加上少量训练代码适配即可实现模型在昇腾硬件上的训练。 | |||

| 本教材旨在为开发者提供一个简明扼要的精度问题与性能问题初步定位指导。如果您还未完成模型迁移转换,可参考[MSAdapter用户使用指南](USER_GUIDE.md)。 | |||

| ## 2.功能调试 | |||

| #### PyNative模式功能调试 | |||

| 1)当执行出现异常时,您会得到由MindSpore反馈的报错信息,MindSpore报错信息采用Python Traceback处理,包括Python堆栈信息、报错类型与报错描述等信息,对于接口级别的问题,可以根据报错堆栈信息快速定位出问题位置: | |||

|  | |||

| 更多细节请参考[MindSpore功能调试](https://www.mindspore.cn/tutorials/experts/zh-CN/master/debug/function_debug.html)。 | |||

| 2)PyNative模式模式下可以通过添加Print打印信息获取问题接口当前的输入数据具体取值: | |||

| 若输入数据不符合预期,则可能由于前置接口导致问题,可以在关键位置添加断点,逐步缩小范围,直至明确问题接口; | |||

| 如果您在使用过程中遇到框架问题或接口无法对标请通过[ISSUE](https://openi.pcl.ac.cn/OpenI/MSAdapter/issues) 和我们反馈交流。 | |||

| #### Graph模式功能调试 | |||

| 首先推荐您在PyNative模式(即默认模式)下完成功能调试后再尝试Graph模式执行。当Graph模式出现异常时,可结合报错信息和[静态图语法支持](https://www.mindspore.cn/docs/zh-CN/master/note/static_graph_syntax_support.html)文档进行手动适配。同时您将您的受限场景通过[ISSUE](https://openi.pcl.ac.cn/OpenI/MSAdapter/issues) 反馈给我们,我们会优先分析支持。 | |||

| ## 3.精度调优 | |||

| 您可以通过对比迁移后模型和torch原始模型的执行结果,确保迁移模型的功能正确性。 | |||

| #### 方式一:利用TroubleShooter工具进行比较 | |||

| Step1:安装TroubleShooter工具 | |||

| ``` | |||

| pip install troubleshooter -i https://pypi.org/simple | |||

| ``` | |||

| Step2:参考以下用例进行模型推理结果对比 | |||

| ```python | |||

| import sys | |||

| import numpy as np | |||

| import troubleshooter as ts | |||

| sys.path.append("./alexnet_adapter.py") # MSAdapter模型定义文件路经 | |||

| sys.path.append("./alexnet_torch.py") # PyTorch模型定义文件路经 | |||

| from alexnet_adapter import AlexNet as msa_net | |||

| from alexnet_torch import AlexNet as torch_net | |||

| pt_net = torch_net() | |||

| ms_net = msa_net() | |||

| diff_finder = ts.migrator.NetDifferenceFinder(pt_net=pt_net, ms_net=ms_net, auto_conv_ckpt=2) | |||

| # auto_conv_ckpt为2时, PyTorch网络权重会自动加载到MSAdapter网络权重中; | |||

| diff_finder.compare(auto_inputs=(((128, 3, 224, 224), np.float32), )) # 提供输入的shape和type自动构造输入数据,并进行比较输出结果,默认执行model.eval()模式; | |||

| ``` | |||

| 您将获得如下执行结果: | |||

|  | |||

| PyTorch原生模型权重与MSAdapter迁移模型权重映射情况; | |||

|  | |||

| PyTorch原生模型与MSAdapter迁移模型完成权重自动转换后权重值比较结果; | |||

|  | |||

| PyTorch原生模型与MSAdapter迁移模型推理结果比较,如图所示则表示网络推理结果完全一致。 | |||

| 更多使用细节可参考教程[应用场景5:比较MindSpore和PyTorch网络输出是否一致](https://gitee.com/mindspore/toolkits/blob/master/troubleshooter/docs/migrator.md#%E5%BA%94%E7%94%A8%E5%9C%BA%E6%99%AF5%E6%AF%94%E8%BE%83mindspore%E5%92%8Cpytorch%E7%BD%91%E7%BB%9C%E8%BE%93%E5%87%BA%E6%98%AF%E5%90%A6%E4%B8%80%E8%87%B4)。 | |||

| #### 方式二:手动加载pth进行比较 | |||

| 在比较之前,需要保证以下条件的一致性: | |||

| 1)确保网络输入完全一致(可以使用固定的输入数据,也可调用真实数据集); | |||

| 2)确保执行推理模式 | |||

| ``` | |||

| model = LeNet() | |||

| model.eval() | |||

| ``` | |||

| 由于框架随机策略(详情请参考[MindSpore与PyTorch随机数策略的区别](https://www.mindspore.cn/docs/zh-CN/r2.0/migration_guide/typical_api_comparision.html#%E4%B8%8Epytorch%E9%9A%8F%E6%9C%BA%E6%95%B0%E7%AD%96%E7%95%A5%E7%9A%84%E5%8C%BA%E5%88%AB))以及各自内置随机数生成算法的实现存在差异,所以即使用户配置相同的随机种子,两个框架生成的随机数并不一致。同理,带有随机性的接口,如`nn.dropout`,当配置概率不为0或1时,即使输入一致,由于内置随机数逻辑差异,两个框架得到的输出结果并不一致。通过配置网络为推理模式则可排除这方面随机性的影响。 | |||

| 3)确保网络权重的一致性 | |||

| 由于MindSpore随机策略与PyTorch随机策略有所不同,即使网络层初始化策略与算法完全一致,也无法保证权重值一致。此时可以先保存torch的网络权重,再加载至MSAdapter迁移模型的权重中: | |||

| Step1:在torch原始脚本中保存网络权重至本地 | |||

| ```python | |||

| torch.save(net.state_dict(), 'model.pth') | |||

| ``` | |||

| Step2:将torch权重加载至MSAdapter迁移模型中 | |||

| ```python | |||

| net.load_state_dict(torch.load('model.pth',from_torch=True), strict=True) | |||

| ``` | |||

| 在MSAdapter迁移网络脚本中加载Step1保存的pth,同时配置`from_torch=True`,即可将torch的权重加载到迁移模型中,从而保证网络权重的一致性; | |||

| 如果输出误差过大情况,可以在PyNative模式下基于关键位置添加断点,逐步缩小范围,直至明确误差是否合理。 | |||

| ## 4.性能调优 | |||

| 本章节从单卡的性能调优指导入手,帮助用户快速找到单卡训练过程中的性能瓶颈点。多卡场景亦可采用类似手段进行分析。 | |||

| 注:由于首步执行可能存在设备预热/初始化等耗时,下述内容均排除首步执行,推荐观察训练趋于稳定时的现象。 | |||

| 通常训练过程中各个迭代的耗时可拆分为数据预处理部分耗时和网络执行更新部分耗时。可以分别进行耗时统计,明确性能瓶颈发生在哪个阶段,以常见的函数式训练写法为例: | |||

| ```python | |||

| import time | |||

| ... | |||

| train_data = DataLoader(train_set, batch_size=128, shuffle=True, num_workers=2, drop_last=True) | |||

| ... | |||

| # 数据迭代训练 | |||

| for i in range(epochs): | |||

| train_time = time.time() | |||

| for X, y in train_data: | |||

| X, y = X.to(config_args.device), y.to(config_args.device) | |||

| date_time = time.time() | |||

| print("Data Time: ", date_time - train_time, flush=True) # 数据预处理部分耗时 | |||

| res = train_step(X, y) | |||

| print("------>epoch:{}, loss:{:.6f}".format(i, res.asnumpy())) | |||

| train_time = time.time() | |||

| print("Train Time: ", train_time - date_time, flush=True) # 网络执行更新部分耗时 | |||

| ``` | |||

| 一般情况下,Data Time基本可忽略不计,而Train Time基本等价于每迭代的总耗时。 | |||

| #### 数据处理性能调优 | |||

| 1.启用多进程数据加载 | |||

| 如果出现数据耗时过大的情况,请先确认是否合理配置DataLoader中的`num_workers`属性。`num_workers`表示采用多进程并行方式执行数据加载时的进程数,`num_workers`取值越大表示并行程度越高,但由于并行进程会开辟额外存储空间,以及进程数过多可能加剧进程间通讯耗时,不推荐配置过大,按需配置即可。推荐将`num_workers`配置为单次网络训练耗时与单次数据预处理耗时的差异倍数向上取整的取值,例如,网络执行单次耗时为10 s/step,数据预处理单次耗时为20 s/step,则配置`num_workers=2`可使得数据处理耗时基本可被完全隐藏。 | |||

| 2.优化数据预处理操作 | |||

| 如果依照上述方法预计的`num_workers`取值大于16,可以着重分析数据预处理耗时,性能瓶颈可能出现在预处理操作中。如自定义的collate_fn函数较为耗时等。 | |||

| #### 网络执行性能调优 | |||

| 本章节只涉及PyNative模式下分析网络API级别耗时。Graph模式为整图下沉执行,耗时主要集中于算子执行,可直接参考[算子执行性能调优](#jumpch1)进行分析。 | |||

| 1.动态图模式下可以通过开启同步结合打点计时分析性能瓶颈 | |||

| ```python | |||

| ms.set_context(pynative_synchronize=True) | |||

| ``` | |||

| 注意:若未开启同步,python侧计时可能不能准确反映真实执行耗时。同步可能导致网络执行耗时轻微增大,性能调试结束后请关闭同步后训练网络。 | |||

| 2.结合 cProfile 工具分析主要耗时接口 | |||

| ```python | |||

| import cProfile, pstats, io | |||

| from pstats import SortKey | |||

| pr = cProfile.Profile() | |||

| pr.enable() | |||

| ... | |||

| 训练代码 | |||

| ... | |||

| pr.disable() | |||

| s = io.StringIO() | |||

| ps = pstats.Stats(pr, stream=s).sort_stats('cumtime') | |||

| ps.print_stats() | |||

| with open('time_log.txt', 'w+') as f: | |||

| f.write(s.getvalue()) | |||

| ``` | |||

| 其中`sort_stats`配置为`cumtime`表示依照接口耗时(包含该接口内部调用其他接口的总耗时)排序,若配置为`tottime`则表示依照接口耗时(排除接口内部调用其他接口的耗时)排序。 | |||

|  | |||

| 执行后您将得到如图所示的统计文件,我们主要关注msadapter目录下具体接口的耗时,以alexnet为例,conv2d为耗时占比最高的接口。 | |||

| #### <span id="jumpch1">算子执行性能调优</span> | |||

| [MindSpore Insight](https://mindspore.cn/mindinsight/docs/zh-CN/r2.0/performance_tuning_guide.html)是MindSpore原生框架提供的性能分析工具,从单机和集群的角度分别提供了多项指标,用于帮助用户进行性能调优。利用该工具用户可观察到硬件侧算子的执行耗时,昇腾环境可参考[性能调试(Ascend)](https://www.mindspore.cn/mindinsight/docs/zh-CN/r2.0/performance_profiling_ascend.html),GPU环境可参考[性能调试(GPU)](https://www.mindspore.cn/mindinsight/docs/zh-CN/r2.0/performance_profiling_gpu.html)。 | |||

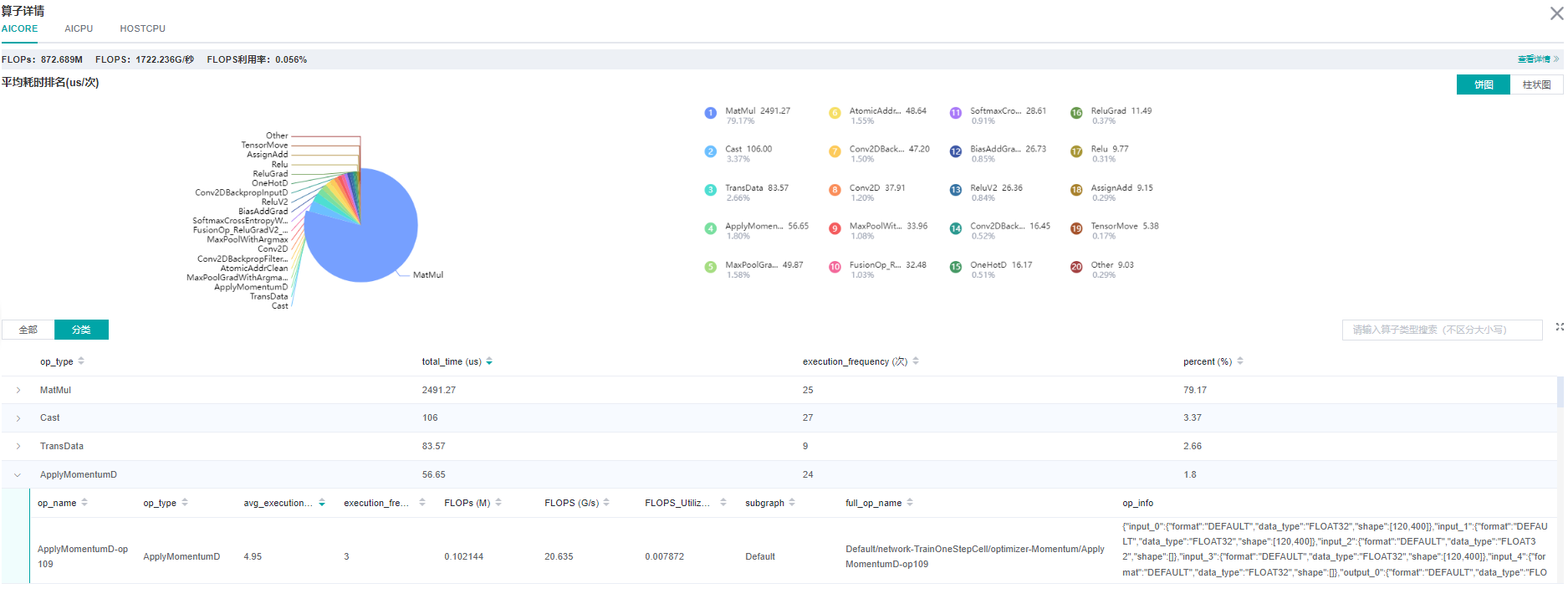

|  | |||

| 最终您将得到如图所示的算子性能分析看板,通过该看板可以明确算子总耗时/算子平均单次耗时/算子耗时占比等信息。 | |||

+ 34

- 72

README.md

View File

| @@ -4,24 +4,27 @@ | |||

| ## 简介 | |||

| MSAdapter是MindSpore适配PyTorch接口的工具,其目的是在不改变原有PyTorch用户的使用习惯情况下,使得PyTorch代码能在昇腾上获得高效性能. | |||

| <p align="center"><img src="https://openi.pcl.ac.cn/laich/pose_data/raw/branch/master/MSA_F.png" width="580"\></p> | |||

| MSAdapter是将PyTorch训练脚本高效迁移至MindSpore框架执行的工具,其目的是在不改变原有PyTorch用户的使用习惯情况下,使得PyTorch代码能在昇腾上获得高效性能。 | |||

| <p align="center"><img src="doc/pic/MSA_F.png" width="580"\></p> | |||

| - PyTorch接口支持: MSAdapter的目的是支持PyTorch语法的原生态表达,用户只需要将PyTorch源代码中```import torch```替换为```import ms_adapter.pytorch```即可实现模型能支持昇腾上训练。模型中所使用的高阶APIs支持状态可以从这里找到 [Supported List](SupportedList.md) | |||

| - PyTroch接口支持范围: MSAdapter目前主要适配PyTorch的数据处理和模型结构部分代码,目前完全支持MindSpore的PYNATIVE模式下训练,部分网络结构支持GRAPH模式训练。训练过程部分代码需要用户自定义编写具体使用和需要修改的地方可以参考[迁移示例](https://openi.pcl.ac.cn/OpenI/MSAdapterModelZoo/src/branch/master/official/cv/alexnet) | |||

| - **PyTorch接口支持**: MSAdapter的目的是支持PyTorch语法的原生态表达,用户只需要将PyTorch源代码中```import torch```替换为```import msadapter.pytorch```即可实现模型能支持昇腾上训练。模型中所使用的高阶APIs支持状态可以从这里找到 [Supported List](SupportedList.md)。 | |||

| - **PyTorch接口支持范围**: MSAdapter目前主要适配PyTorch的数据处理和模型结构部分代码,目前完全支持MindSpore的PYNATIVE模式下训练,部分网络结构支持GRAPH模式训练。 | |||

| - **TorchVision接口支持**: MSAdapter TorchVision是迁移自PyTorch官方实现的计算机视觉工具库,延用PyTorch官方api设计与使用习惯,内部计算调用MindSpore算子,实现与torchvision原始库同等功能。用户只需要将PyTorch源代码中```import torchvision```替换为```import msadapter.torchvision```即可。TorchVision支持状态可以从这里找到 [TorchVision Supported List](msadapter/torchvision/TorchVision_SupportedList.md)。 | |||

| ## 安装 | |||

| 首先查看[版本说明](#版本说明)选择所需的MSAdapter和MindSpore版本。 | |||

| ### 安装MindSpore | |||

| 请根据MindSpore官网[安装指南](https://www.mindspore.cn/install),安装2.0.0及以上版本的MindSpore。 | |||

| 请根据MindSpore官网[安装指南](https://www.mindspore.cn/install) 进行安装。 | |||

| ### 安装MSAdapter | |||

| #### 通过pip安装 (待版本发布后) | |||

| #### 通过pip安装 | |||

| ```bash | |||

| pip install ms_adapter | |||

| pip install msadapter | |||

| ``` | |||

| #### 通过源码安装 | |||

| ```bash | |||

| git clone https://git.openi.org.cn/OpenI/MSAdapter.git | |||

| @@ -33,78 +36,37 @@ pip install ms_adapter | |||

| python setup.py install --user || exit 1 | |||

| ``` | |||

| ## 使用 | |||

| 在数据处理和模型构建上,MSAdapter可以和PyTorch一样使用,模型训练部分代码需要自定义,示例如下: | |||

| 参考[MSAdapter用户使用指南](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/USER_GUIDE.md),您将快速入门完成PyTorch原生代码的迁移,以及上手各种进阶优化手段;如果您有对精度和性能调优的需求可参考[MSAdapter调试调优指南](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/Debugging_and_Tuning.md)。 | |||

| ### 1.数据处理(仅修改导入包) | |||

| ```python | |||

| from ms_adapter.pytorch.utils.data import DataLoader | |||

| from ms_adapter.torchvision import datasets, transforms | |||

| ## 资源 | |||

| - 模型库:MSAdapter支持丰富的深度学习应用,这里给出了从PyTorch官方代码迁移到MSAdapter模型。[已验证模型资源](https://git.openi.org.cn/OpenI/MSAdapterModelZoo) | |||

| transform = transforms.Compose([transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC), | |||

| transforms.ToTensor(), | |||

| transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.247, 0.2435, 0.2616]) | |||

| ]) | |||

| train_images = datasets.CIFAR10('./', train=True, download=True, transform=transform) | |||

| train_data = DataLoader(train_images, batch_size=128, shuffle=True, num_workers=2, drop_last=True) | |||

| ## 版本说明 | |||

| ``` | |||

| ### 2.模型构建(仅修改导入包) | |||

| ```python | |||

| from ms_adapter.pytorch.nn import Module, Linear, Flatten | |||

| class MLP(Module): | |||

| def __init__(self): | |||

| super(MLP, self).__init__() | |||

| self.flatten = Flatten() | |||

| self.line1 = Linear(in_features=1024, out_features=64) | |||

| self.line2 = Linear(in_features=64, out_features=128, bias=False) | |||

| self.line3 = Linear(in_features=128, out_features=10) | |||

| def forward(self, inputs): | |||

| x = self.flatten(inputs) | |||

| x = self.line1(x) | |||

| x = self.line2(x) | |||

| x = self.line3(x) | |||

| return x | |||

| ``` | |||

| ### 3.模型训练(自定义训练) | |||

| ```python | |||

| import ms_adapter.pytorch as torch | |||

| import ms_adapter.pytorch.nn as nn | |||

| import mindspore as ms | |||

| net = MLP() | |||

| net.train() | |||

| epochs = 500 | |||

| criterion = nn.CrossEntropyLoss() | |||

| optimizer = torch.optim.SGD(net.parameters(), lr=0.01, momentum=0.9, weight_decay=0.0005) | |||

| # 定义训练过程 | |||

| loss_net = ms.nn.WithLossCell(net, criterion) | |||

| train_net = ms.nn.TrainOneStepCell(loss_net, optimizer) | |||

| for i in range(epochs): | |||

| for X, y in train_data: | |||

| res = train_net(X, y) | |||

| print("epoch:{}, loss:{:.6f}".format(i, res.asnumpy())) | |||

| # 模型保存 | |||

| ms.save_checkpoint(net, "save_path.ckpt") | |||

| ``` | |||

| | **分支名** | **发布版本** | **发布时间** | **配套MindSpore版本** | 启智算力资源 | | |||

| |--------------|----------------|--------------------|-------------------------|------------------------------------------------| | |||

| | **release_0.1** | 0.1 | 2023-06-15 | [MindSpore 2.0.0](https://www.mindspore.cn/install) | [智算网络集群](https://openi.pcl.ac.cn/OpenI/MSAdapter/grampus/notebook/create?type=1) - 镜像:mindspore2.0rc_cann6.3_notebook | | |||

| | **release_0.1rc** | 0.1rc | 2023-04-23 | [MindSpore 2.0.0rc1](https://www.mindspore.cn/versions) | [智算网络集群](https://openi.pcl.ac.cn/OpenI/MSAdapter/grampus/notebook/create?type=1) - 镜像:mindspore2.0rc_cann6.3_notebook | | |||

| | **release_0.1beta** | 0.1beta | 2023-03-27 | [MindSpore Nightly(0205)](https://openi.pcl.ac.cn/attachments/63457dd2-5eb3-4a6b-a4e4-41b6dca8d0e9?type=0) | - | | |||

| | **master** | - | - | [MindSpore 2.0.0](https://www.mindspore.cn/install) | - | | |||

| - MSAdapter已发布版本获取请参阅[RELEASE](https://openi.pcl.ac.cn/OpenI/MSAdapter/releases)。 | |||

| - MindSpore版本推荐从[MindSpore官网](https://www.mindspore.cn/versions)获取,或者从启智平台[数据资源](https://openi.pcl.ac.cn/OpenI/MSAdapter/datasets)中获取。 | |||

| ## 正在进行的工作 | |||

| - 支持更多torch的接口。 | |||

| - 支持torchaudio数据处理接口。 | |||

| - 性能优化。 | |||

| ## 资源 | |||

| - 模型库:MSAdapter支持丰富的深度学习应用,这里给出了从PyTorch官方代码迁移到MSAdapter模型。[已验证模型资源](https://git.openi.org.cn/OpenI/MSAdapterModelZoo) | |||

| ## 贡献 | |||

| 欢迎开发者参与贡献。更多详情,请参阅我们的[贡献指南](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/CONTRIBUTING_CN.md). | |||

| ## 加入我们 | |||

| 如果您在使用时有任何问题或建议,欢迎加入MSAdapter SIG参与讨论。 | |||

| <p align="leaf"><img src="doc/pic/MSA_SIG.png" width="580"\></p> | |||

| ## 许可证 | |||

| [Apache License 2.0](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/LICENSE) | |||

| ## FAQ | |||

| Q:设置context.set_context(mode=context.GRAPH_MODE)后运行出现类似问题:`Tensor.add_` is an in-place operation and "x.add_()" is not encouraged to use in MindSpore static graph mode. Please use "x = x.add()" or other API instead。 | |||

| A:目前在设置GRAPH模式下不支持原地操作相关的接口,需要按照提示信息进行修改。需要注意的是,即使在PYNATIVE模式下,原地操作相关接口也是不鼓励使用的,因为目前在MSAdapter不会带来内存收益,而且会给反向梯度计算带来不确定性。 | |||

| Q:运行代码出现类似报错信息:AttributeError: module 'ms_adapter.pytorch' has no attribute 'xxx'。 | |||

| A:首先确定'xxx'是否为torch 1.12版本支持的接口,PyTorch官网明确已废弃或者即将废弃的接口和参数,MSAdapter不会兼容支持,请使用其他同等功能的接口代替。如果是PyTorch对应版本支持,而MSAdapter中暂时没有,欢迎参与[MSAdapter项目](https://openi.pcl.ac.cn/OpenI/MSAdapter)贡献你的代码,也可以通过[创建任务(New issue)](https://openi.pcl.ac.cn/OpenI/MSAdapter/issues/new)反馈需求。 | |||

+ 96

- 0

README.rst

View File

| @@ -0,0 +1,96 @@ | |||

| Introduction | |||

| ============= | |||

| MSAdapter is MindSpore tool for adapting the PyTorch interface, which is designed to make PyTorch code perform efficiently on Ascend without changing the habits of the original PyTorch users. | |||

| |MSAdapter-architecture| | |||

| Install | |||

| ======= | |||

| MSAdapter has some prerequisites that need to be installed first, including MindSpore, PIL, NumPy. | |||

| .. code:: bash | |||

| # for last stable version | |||

| pip install msadapter | |||

| # for latest release candidate | |||

| pip install --upgrade --pre msadapter | |||

| Alternatively, you can install the latest or development version by directly pulling from OpenI: | |||

| .. code:: bash | |||

| pip3 install git+https://openi.pcl.ac.cn/OpenI/MSAdapter.git | |||

| User guide | |||

| =========== | |||

| For data processing and model building, MSAdapter can be used in the same way as PyTorch, while the model training part of the code needs to be customized, as shown in the following example. | |||

| 1. Data processing (only modify the import package) | |||

| .. code:: python | |||

| from msadapter.pytorch.utils.data import DataLoader | |||

| from msadapter.torchvision import datasets, transforms | |||

| transform = transforms.Compose([transforms.Resize((224, 224), interpolation=InterpolationMode.BICUBIC), | |||

| transforms.ToTensor(), | |||

| transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.247, 0.2435, 0.2616]) | |||

| ]) | |||

| train_images = datasets.CIFAR10('./', train=True, download=True, transform=transform) | |||

| train_data = DataLoader(train_images, batch_size=128, shuffle=True, num_workers=2, drop_last=True) | |||

| 2. Model construction (modify import package only) | |||

| .. code:: python | |||

| from msadapter.pytorch.nn import Module, Linear, Flatten | |||

| class MLP(Module): | |||

| def __init__(self): | |||

| super(MLP, self).__init__() | |||

| self.flatten = Flatten() | |||

| self.line1 = Linear(in_features=1024, out_features=64) | |||

| self.line2 = Linear(in_features=64, out_features=128, bias=False) | |||

| self.line3 = Linear(in_features=128, out_features=10) | |||

| def forward(self, inputs): | |||

| x = self.flatten(inputs) | |||

| x = self.line1(x) | |||

| x = self.line2(x) | |||

| x = self.line3(x) | |||

| return x | |||

| 3.Model training (custom training) | |||

| .. code:: python | |||

| import msadapter.pytorch as torch | |||

| import msadapter.pytorch.nn as nn | |||

| import mindspore as ms | |||

| net = MLP() | |||

| net.train() | |||

| epochs = 500 | |||

| criterion = nn.CrossEntropyLoss() | |||

| optimizer = ms.nn.SGD(net.trainable_params(), learning_rate=0.01, momentum=0.9, weight_decay=0.0005) | |||

| # Define the training process | |||

| loss_net = ms.nn.WithLossCell(net, criterion) | |||

| train_net = ms.nn.TrainOneStepCell(loss_net, optimizer) | |||

| for i in range(epochs): | |||

| for X, y in train_data: | |||

| res = train_net(X, y) | |||

| print("epoch:{}, loss:{:.6f}".format(i, res.asnumpy())) | |||

| # Save model | |||

| ms.save_checkpoint(net, "save_path.ckpt") | |||

| License | |||

| ======= | |||

| MSAdapter is released under the Apache 2.0 license. | |||

| .. |MSAdapter-architecture| image:: https://openi.pcl.ac.cn/laich/pose_data/raw/branch/master/MSA_F.png | |||

+ 66

- 0

README_en.md

View File

| @@ -0,0 +1,66 @@ | |||

| # MSAdapter | |||

| [简体中文](README.md) | [English] | |||

| ## Introduction | |||

| MSAdapter is MindSpore tool for adapting the PyTorch interface, which is designed to make PyTorch code perform efficiently on Ascend without changing the habits of the original PyTorch users. | |||

| <p align="center"><img src="https://openi.pcl.ac.cn/laich/pose_data/raw/branch/master/MSA_F.png" width="580"\></p> | |||

| - **PyTorch interface support**: MSAdapter aims to support the original expression of PyTorch syntax, users just need to replace ``import torch`` in PyTorch source code with ``import msadapter.pytorch`` to realize that the model can support training on ascending. The support status of the higher-order APIs used in the model can be found here [Supported List](SupportedList_en.md). | |||

| - **PyTorch interface support scope**: MSAdapter is currently mainly adapted to PyTorch data processing and model structure part of the code, currently fully supports MindSpore's PYNATIVE mode training, part of the network structure support GRAPH mode training. | |||

| - **TorchVision interface support**: MSAdapter TorchVision is a computer vision tool library migrated from PyTorch's official implementation. It continues to use PyTorch's official api design, and calls `MindSpore` operators for calculations to achieve the same functions as the original `torchvision` library. Users only need to replace ```import torchvision``` in the PyTorch source code with ```import msadapter.torchvision```. | |||

| TorchVision support status can be found from here [TorchVision Supported List](msadapter/torchvision/TorchVision_SupportedList_en.md) | |||

| ## Install | |||

| Please check the [Version Description](#Version-Description) to select the required version of MSAdapter and MindSpore. | |||

| ### Install MindSpore | |||

| Please install MindSpore according to the [Installation Guide](https://www.mindspore.cn/install/en) on MindSpore official website. | |||

| ### Install MSAdapter | |||

| #### via pip | |||

| ```bash | |||

| pip install msadapter | |||

| ``` | |||

| #### via source code | |||

| ```bash | |||

| git clone https://git.openi.org.cn/OpenI/MSAdapter.git | |||

| cd MSAdapter | |||

| python setup.py install | |||

| ``` | |||

| If there is an insufficient permissions message, install as follows | |||

| ```bash | |||

| python setup.py install --user || exit 1 | |||

| ``` | |||

| ## User guide | |||

| Refer to the [User Guide](USER_GUIDE.md), you will quickly get started and complete the transformation from PyTorch code, as well as get started with various advanced optimization skills; More over, if you have requirements for precision and performance tuning, please refer to the [Debugging and Tuning Guide](Debugging_and_Tuning.md). | |||

| ## Resources | |||

| - Model library: MSAdapter supports rich deep learning applications, migration to MSAdapter models from the official PyTorch code is given here. [Model Resources](https://git.openi.org.cn/OpenI/MSAdapterModelZoo). | |||

| ## Version Description | |||

| | **Branch** | **Version** | **Initial Release Date** | **MindSpore Version** | OpenI Computing Resources | | |||

| |--------------|----------------|------------------------|-------------------------|-----------------| | |||

| | **release_0.1** | 0.1 | 2023-06-15 | [MindSpore 2.0.0](https://www.mindspore.cn/install/en) | [China Computing NET](https://openi.pcl.ac.cn/OpenI/MSAdapter/grampus/notebook/create?type=1) - Image:mindspore2.0rc_cann6.3_notebook | | |||

| | **release_0.1rc** | 0.1rc | 2023-04-23 | [MindSpore 2.0.0rc1](https://www.mindspore.cn/versions/en) | [China Computing NET](https://openi.pcl.ac.cn/OpenI/MSAdapter/grampus/notebook/create?type=1) - Image:mindspore2.0rc_cann6.3_notebook | | |||

| | **release_0.1beta** | 0.1beta | 2023-03-27 | [MindSpore Nightly(0205)](https://openi.pcl.ac.cn/attachments/63457dd2-5eb3-4a6b-a4e4-41b6dca8d0e9?type=0) | - | | |||

| | **master** | - | - | [MindSpore 2.0.0](https://www.mindspore.cn/install)| - | | |||

| - For the released version of MSAdapter, please refer to [RELEASE](https://openi.pcl.ac.cn/OpenI/MSAdapter/releases). | |||

| - The MindSpore is recommended to be obtained from the [MindSpore official website](https://www.mindspore.cn/versions/en) or from our [data resources](https://openi.pcl.ac.cn/OpenI/MSAdapter/datasets). | |||

| ## On Going and Future Work | |||

| - More APIs of torch will be supported. | |||

| - Datasets APIs of torchaudio will be supported. | |||

| - Performance optimization. | |||

| ## Contributing | |||

| Developers are welcome to contribute. For more details, please see our [Contribution Guidelines](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/CONTRIBUTING_CN.md). | |||

| ## License | |||

| [Apache License 2.0](https://openi.pcl.ac.cn/OpenI/MSAdapter/src/branch/master/LICENSE) | |||

+ 1172

- 91

SupportedList.md

View File

| @@ -1,100 +1,1181 @@ | |||

| ## List of PyTorch APIs supported by MSAdapter | |||

| | MSAdapter APIs | Status | Notes | | |||

| | --------------- | -------------------- | -------------- | | |||

| | Conv1d | Supported| Pad支持不完善,权重不对齐,需要给出扩展为二维权重| | |||

| | Conv2d | Supported| /| | |||

| | Conv3d | Supported|Pad支持不完善 | | |||

| | ConvTranspose1d |Supported |output_padding参数不支持、pad类型支持不完备 | | |||

| | ConvTranspose2d |Supported |output_padding参数不支持、pad类型支持不完备 | | |||

| | ConvTranspose3d |Supported |output_padding参数不支持、pad类型支持不完备 | | |||

| | Linear | Supported | /| | |||

| | MaxPool1d | Supported|/| | |||

| | AvgPool1d | Supported|/| | |||

| | MaxPool2d | Supported|/| | |||

| | AvgPool2d | Supported|/| | |||

| | MaxPool3d | Supported|/| | |||

| | AvgPool3d | Supported|/| | |||

| | AdaptiveAvgPool1d | Supported| /| | |||

| | AdaptiveAvgPool2d | Supported| /| | |||

| | AdaptiveAvgPool3d | Supported| /| | |||

| | AdaptiveMaxPool1d | Supported| /| | |||

| | AdaptiveMaxPool2d | Supported|/| | |||

| | AdaptiveMaxPool3d | Supported| /| | |||

| | Embedding |Supported | scale_grad_by_freq、sparse参数不支持| | |||

| | Flatten | Supported| /| | |||

| | Unflatten| Supported| /| | |||

| | Dropout | Supported| /| | |||

| |Dropout2D|Supported|/| | |||

| |Dropout3D|Supported|/| | |||

| | BatchNorm1d | Supported| /| | |||

| | BatchNorm2d | Supported| /| | |||

| | BatchNorm3d |Supported | /| | |||

| | PRelu | Pending| /| | |||

| | ReLU |Supported| /| | |||

| | Tanh |Supported| /| | |||

| | Sigmoid |Supported| /| | |||

| | LeakyRelu Supported|| /| | |||

| | Softplus |Supported| /| | |||

| | ReLU6 | Supported| /| | |||

| | LeakyReLU6 |Supported| /| | |||

| |Hardtanh|Supported|/| | |||

| |Hardswish|Supported|/| | |||

| | Mish |Supported| /| | |||

| | Softmax |Supported| /| | |||

| | Elu |Supported | /| | |||

| | RNN | Pending| /| | |||

| | RNNCell | Pending| /| | |||

| | LSTM | Pending| /| | |||

| | LSTMCell | Pending| /| | |||

| | GRU | Pending| /| | |||

| | GRUCell | Pending| /| | |||

| | FractionalMaxPool2d| Supported| /| | |||

| | FractionalMaxPool3d| Supported| /| | |||

| | LPPool1d| Supported| /| | |||

| | LPPool2d| Supported| /| | |||

| | ReflectionPad1d| Supported| /| | |||

| | ReflectionPad2d| Supported| /| | |||

| | ReflectionPad3d| Supported| /| | |||

| | ReplicationPad2d| Supported| /| | |||

| | ReplicationPad3d| Supported| /| | |||

| | ConstantPad1d| Supported| /| | |||

| | ConstantPad2d| Supported| /| | |||

| | ConstantPad3d| Supported| /| | |||

| | Tanhshrink| Supported| /| | |||

| | Threshold| Supported| /| | |||

| | GLU| Supported| /| | |||

| | Softmin| Supported| /| | |||

| | LogSoftmax| Supported| /| | |||

| | SyncBatchNorm| Supported| /| | |||

| | GroupNorm| Supported| 只支持2D| | |||

| | LayerNorm| Supported| /| | |||

| | AlphaDropout| Supported| /| | |||

| | FeatureAlphaDropout| Supported| /| | |||

| | CosineSimilarity| Supported| /| | |||

| | PairwiseDistance| Supported| /| | |||

| | L1Loss| Supported| /| | |||

| | MSELoss| Supported| /| | |||

| | CrossEntropyLoss| Supported| /| | |||

| | NLLLoss| Supported| /| | |||

| | BCELoss| Supported| /| | |||

| | BCEWithLogitsLoss| Supported| /| | |||

| | HuberLoss| Supported| /| | |||

| | SmoothL1Loss| Supported| /| | |||

| | SoftMarginLoss| Supported| /| | |||

| | CosineEmbeddingLoss| Supported| /| | |||

| | MultiMarginLoss| Supported| /| | |||

| | TripletMarginLoss| Supported| /| | |||

| | Upsample| Supported| /| | |||

| | UpsamplingNearest2d| Supported| /| | |||

| | UpsamplingBilinear2d| Supported| /| | |||

| | | | | | |||

| | | | | | |||

| 简体中文 | [English](SupportedList_en.md) | |||

| - [MSAdapter支持API清单](#jump1) | |||

| - [Torch](#jump2) | |||

| - [Tensor](#jump3) | |||

| - [Torch.nn](#jump4) | |||

| - [nn.functional](#jump5) | |||

| - [torch.linalg](#jump6) | |||

| - [torch.optim](#jump7) | |||

| ### <span id="jump8">通用限制</span> | |||

| - 不支持`layout`, `device`, `requires_grad`, `memory_format`参数的配置功能。 | |||

| - 不支持通过`Generator`参数管理生成伪随机数的算法的状态。 | |||

| - 不支持七维及以上的计算。 | |||

| - 复数类型的支持正在完善。 | |||

| - Ascend上对float64类型的输入支持受限,部分接口无法处理float64类型入参,需转换为float32或float16类型之后输入。 | |||

| - [PyTorch中具有视图操作的接口](https://pytorch.org/docs/1.12/tensor_view.html)功能受限,当前输入和输出张量不共享底层数据,而会进行数据拷贝。 | |||

| - 在Ascend和GPU上,部分数据类型(如int16和int32)在溢出的场景下,mindspore和pytorch处理的结果存在差异,因此不建议对具有类型限制的入参进行超出上限或下限的赋值,也不建议对明显超过数据类型的数据向范围更小的数据类型进行转换,以免获得预期之外的结果。 | |||

| - 下表中存在”功能存在限制“标注的接口,请查看[接口约束列表](ConstraintList.md),获取详细信息。 | |||

| ## <span id="jump1">MSAdapter支持API清单</span> | |||

| ### <span id="jump2">Torch</span> | |||

| | MSAdapter接口 | 状态 | 约束 | | |||

| | --------------- | -------------------- | -------------- | | |||

| | torch.is_tensor | 支持 | | | |||

| | torch.is_floating_point | 支持 | | | |||

| | torch.arange | 支持 | | | |||

| | torch.cat | 支持 | | | |||

| | torch.tensor | 支持 | | | |||

| | torch.as_tensor | 支持 | | | |||

| | torch.from_numpy | 支持 | | | |||

| | torch.frombuffer | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.permute | 支持 | | | |||

| | torch.bitwise_left_shift | 支持 | | | |||

| | torch.bitwise_right_shift | 支持 | | | |||

| | torch.nan_to_num | 支持 | | | |||

| | torch.range | 支持 | | | |||

| | torch.linspace | 支持 | | | |||

| | torch.logspace | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.eye | 支持 | | | |||

| | torch.empty | 支持 | | | |||

| | torch.empty_like | 支持 | | | |||

| | torch.eig | 部分支持 | 暂不支持GPU后端 | | |||

| | torch.full | 支持 | | | |||

| | torch.full_like | 支持 | | | |||

| | torch.polar | 支持 | | | |||

| | torch.concat | 支持 | | | |||

| | torch.column_stack | 支持 | | | |||

| | torch.gather | 支持 | | | |||

| | torch.is_complex | 支持 | | | |||

| | torch.hstack | 支持 | | | |||

| | torch.index_select | 支持 | | | |||

| | torch.masked_select | 支持 | | | |||

| | torch.movedim | 支持 | | | |||

| | torch.moveaxis | 支持 | | | |||

| | torch.narrow | 支持 | | | |||

| | torch.nonzero | 支持 | | | |||

| | torch.numel | 支持 | | | |||

| | torch.reshape | 支持 | | | |||

| | torch.row_stack | 支持 | | | |||

| | torch.select | 支持 | | | |||

| | torch.zeros | 支持 | | | |||

| | torch.squeeze | 支持 | | | |||

| | torch.stack | 支持 | | | |||

| | torch.swapaxes | 支持 | | | |||

| | torch.swapdims | 支持 | | | |||

| | torch.zeros_like | 支持 | | | |||

| | torch.take | 支持 | | | |||

| | torch.ones | 支持 | | | |||

| | torch.tile | 支持 | | | |||

| | torch.transpose | 支持 | | | |||

| | torch.unbind | 支持 | | | |||

| | torch.unsqueeze | 支持 | | | |||

| | torch.ones_like | 支持 | | | |||

| | torch.vstack | 支持 | | | |||

| | torch.heaviside | 支持 | | | |||

| | torch.seed | 支持 | | | |||

| | torch.initial_seed | 支持 | | | |||

| | torch.rand | 支持 | | | |||

| | torch.randn | 支持 | | | |||

| | torch.abs | 支持 | | | |||

| | torch.absolute | 支持 | | | |||

| | torch.acos | 支持 | | | |||

| | torch.adjoint | 支持 | | | |||

| | torch.acosh | 支持 | | | |||

| | torch.arccosh | 支持 | | | |||

| | torch.add | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.addcdiv | 支持 | | | |||

| | torch.addcmul | 支持 | | | |||

| | torch.dsplit | 支持 | | | |||

| | torch.asin | 支持 | | | |||

| | torch.arcsin | 支持 | | | |||

| | torch.asinh | 支持 | | | |||

| | torch.arcsinh | 支持 | | | |||

| | torch.atan | 支持 | | | |||

| | torch.arctan | 支持 | | | |||

| | torch.atanh | 支持 | | | |||

| | torch.arctanh | 支持 | | | |||

| | torch.atan2 | 支持 | | | |||

| | torch.arctan2 | 支持 | | | |||

| | torch.bitwise_not | 支持 | | | |||

| | torch.bitwise_and | 支持 | | | |||

| | torch.bitwise_or | 支持 | | | |||

| | torch.bitwise_xor | 支持 | | | |||

| | torch.hsplit | 支持 | | | |||

| | torch.split | 支持 | | | |||

| | torch.ceil | 支持 | | | |||

| | torch.t | 支持 | | | |||

| | torch.tensor_split | 支持 | | | |||

| | torch.conj_physical | 支持 | | | |||

| | torch.copysign | 支持 | | | |||

| | torch.cos | 支持 | | | |||

| | torch.cosh | 支持 | | | |||

| | torch.deg2rad | 支持 | | | |||

| | torch.device | 支持 | | | |||

| | torch.div | 支持 | | | |||

| | torch.divide | 支持 | | | |||

| | torch.erf | 支持 | | | |||

| | torch.erfc | 支持 | | | |||

| | torch.erfinv | 支持 | | | |||

| | torch.exp | 支持 | | | |||

| | torch.exp2 | 支持 | | | |||

| | torch.expm1 | 支持 | | | |||

| | torch.fix | 支持 | | | |||

| | torch.vsplit | 支持 | | | |||

| | torch.floor | 支持 | | | |||

| | torch.floor_divide | 支持 | | | |||

| | torch.where | 支持 | | | |||

| | torch.frac | 支持 | | | |||

| | torch.frexp | 支持 | | | |||

| | torch.finfo | 支持 | | | |||

| | torch.iinfo | 支持 | | | |||

| | torch.ldexp | 支持 | | | |||

| | torch.lerp | 支持 | | | |||

| | torch.arccos | 支持 | | | |||

| | torch.log | 支持 | | | |||

| | torch.angle | 支持 | | | |||

| | torch.log1p | 支持 | | | |||

| | torch.clamp | 支持 | | | |||

| | torch.logaddexp | 支持 | | | |||

| | torch.logaddexp2 | 支持 | | | |||

| | torch.logical_not | 支持 | | | |||

| | torch.logical_or | 支持 | | | |||

| | torch.logit | 支持 | | | |||

| | torch.clip | 支持 | | | |||

| | torch.float_power | 部分支持 | [输入参数有限制](ConstraintList.md) | | |||

| | torch.igammac | 支持 | | | |||

| | torch.mul | 支持 | | | |||

| | torch.fmod | 支持 | | | |||

| | torch.lgamma | 部分支持 | [输入参数有限制](ConstraintList.md) | | |||

| | torch.neg | 支持 | | | |||

| | torch.log10 | 支持 | | | |||

| | torch.nextafter | 部分支持 | [输入参数有限制](ConstraintList.md) | | |||

| | torch.positive | 支持 | | | |||

| | torch.pow | 支持 | | | |||

| | torch.rad2deg | 支持 | | | |||

| | torch.log2 | 支持 | | | |||

| | torch.hypot | 支持 | | | |||

| | torch.remainder | 支持 | | | |||

| | torch.round | 支持 | | | |||

| | torch.sigmoid | 支持 | | | |||

| | torch.multiply | 支持 | | | |||

| | torch.negative | 支持 | | | |||

| | torch.sin | 支持 | | | |||

| | torch.reciprocal | 支持 | | | |||

| | torch.sinh | 支持 | | | |||

| | torch.sqrt | 支持 | | | |||

| | torch.roll | 支持 | | | |||

| | torch.rot90| 支持 | | | |||

| | torch.square | 支持 | | | |||

| | torch.sub | 支持 | | | |||

| | torch.rsqrt | 支持 | | | |||

| | torch.tan | 支持 | | | |||

| | torch.tanh | 支持 | | | |||

| | torch.sign | 支持 | | | |||

| | torch.trunc | 支持 | | | |||

| | torch.xlogy | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.amax | 支持 | | | |||

| | torch.amin | 支持 | | | |||

| | torch.aminmax | 支持 | | | |||

| | torch.all | 支持 | | | |||

| | torch.any | 支持 | | | |||

| | torch.min | 支持 | | | |||

| | torch.dist | 支持 | | | |||

| | torch.logsumexp | 支持 | | | |||

| | torch.nanmean | 支持 | | | |||

| | torch.nansum | 支持 | | | |||

| | torch.prod | 支持 | | | |||

| | torch.qr | 支持 | | | |||

| | torch.std | 支持 | | | |||

| | torch.sgn | 支持 | | | |||

| | torch.unique_consecutive | 支持 | | | |||

| | torch.var | 支持 | | | |||

| | torch.count_nonzero | 支持 | | | |||

| | torch.allclose | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.signbit | 支持 | | | |||

| | torch.eq | 支持 | | | |||

| | torch.equal | 支持 | | | |||

| | torch.ge | 支持 | | | |||

| | torch.greater_equal | 支持 | | | |||

| | torch.gt | 支持 | | | |||

| | torch.greater | 支持 | | | |||

| | torch.isclose | 部分支持 | [功能存在限制](ConstraintList.md) | | |||

| | torch.isfinite | 支持 | | | |||

| | torch.isin | 支持 | | | |||

| | torch.isinf | 支持 | | | |||

| | torch.isposinf | 支持 | | | |||

| | torch.isneginf | 支持 | | | |||

| | torch.isnan | 支持 | | | |||

| | torch.isreal | 支持 | | | |||

| | torch.is_nonzero | 支持 | | | |||

| | torch.le | 支持 | | | |||